Not all reading is alike: Task modulation of magnetic evoked response to visual word

Abstract

Background. Previous studies have shown that the brain response to a written word depends on the task: whether the word is a target in a version of the lexical decision task or should be read silently. Although this effect has been interpreted as evidence for an interaction between word recognition processes and task demands, it may also be caused by greater attention allocation to the target word.

Objective. We aimed to examine the task effect on the brain response evoked by non-target written words.

Design. Using MEG and magnetic source imaging, we compared the spatial-temporal pattern of the brain response elicited by a noun cue when it was read silently either without an additional task (SR) or with a requirement to produce an associated verb (VG).

Results. The task demands penetrated into early (200-300 ms) and late (500-800 ms) stages of word processing by enhancing the brain response under the VG versus the SR condition. The cortical sources of the early response were localized to bilateral inferior occipitotemporal and anterior temporal cortex, suggesting that the more demanding VG task required elaborated lexical-semantic analysis. The late effect was observed in the associative auditory areas in the middle and superior temporal gyri and in the motor representation of articulators. Our results suggest that a remote goal plays a pivotal role in enhanced recruitment of cortical structures underlying orthographic, semantic and sensorimotor dimensions of written word perception from the early processing stages. Surprisingly, we found that to fulfil a more challenging goal the brain progressively engaged resources of the right hemisphere throughout all stages of silent reading.

Conclusion. Our study demonstrates that a deeper processing of linguistic input amplifies activation of brain areas involved in integration of speech perception and production. This is consistent with theories that emphasize the role of sensorimotor integration in speech understanding.

Received: 29.05.2017

Accepted: 13.06.2017

Themes: Cognitive psychology

PDF: http://psychologyinrussia.com/volumes/pdf/2017_3/psych_3_2017_14.pdf

Pages: 190-205

DOI: 10.11621/pir.2017.0313

Keywords: visual word recognition, top-down modulations, sensorimotor transformation, speech lateralization, magnetoencephalography (MEG)

Introduction

Visual word recognition lies at the heart of written language capacity. However, the usage of recognized information can vary greatly: a reader can pronounce the written word out loud, respond to it with another word or an action, or even scan through it without conscious access to its meaning. For a long time the dominant assumption in psycholinguistics has been that word recognition is unaffected by the intention of the reader, in the sense that it is triggered automatically and obligatorily, regardless of the task demands (see, e.g., Neely, & Kahan, 2001; Posner, & Snyder, 1975). Evidence to support this view comes from the Stroop effect (Stroop, 1935): it takes more time to name the color in which a word is written when the word and the color name conflict (e.g., the word red displayed in green font) compared to when the word is neutral with respect to color (e.g., book displayed in green font). Apparently, the meaning of the words is activated despite being task-irrelevant or even disruptive for task performance (see, e.g., MacLeod, 1991, 2005; Velichkovsky, 2006, for reviews).

Multiple priming studies have also shown that a visually presented word elicits automatic access to words and their meaning (e.g., Forster, & Davis, 1984; Marcel, 1983). Priming refers to the consistent finding that processing of a word is facilitated if it is preceded by a semantically related prime word (e.g., cat–dog) relative to when it is preceded by a semantically unrelated word (e.g., ball–chair). If the prime is presented briefly (e.g., 30 ms) and immediately replaced by a mask (e.g., a pattern of symbols at the same spatial location as the prime), participants are usually unable to report having seen the prime but, nonetheless, respond faster and more accurately if it is semantically related to the target (see, e.g., Carr, & Dagenbach, 1990; Marcel, 1983; Neely, 1991; Neely, & Kahan, 2001). On the assumption that the masking procedure prevents the consolidation of long-lasting episodic memories and, therefore, any top-down guidance of word recognition, masked priming was considered key evidence in favor of the theoretical view that the language-processing system is an insulated cognitive module impervious to top-down control (Fodor, 1983).

However, over the last decades new data have challenged the claim of strict automaticity of word recognition. Several behavioral studies showed that the strength of the Stroop effect depends on the context (e.g., Balota, & Yap, 2006; Besner, 2001; Besner, Stolz, & Boutilier, 1997). If only a single letter in a Stroop word is colored (rather than all the letters), or a single letter within a word is spatially pre-cued (rather than the whole word), or the ratio of congruent to incongruent trials is low (20:80), the Stroop effect is significantly reduced (e.g., Besner, 2001). Moreover, there is evidence that the effects of masked primes and of primes presented with a very short prime-target SOA can also be modulated by such a top-down factor as expectancy: decreasing the proportion of related primes leads to a smaller priming effect (Balota, Black, & Cheney, 1992; Bodner, & Masson, 2004).

Non-automatic nature of word perception was also evidenced by the findings demonstrating that the goal of word-related task changed which characteristics of the perceived words were important for the performance. While word naming speed depends on word’s phonological onset variables (voicing, location, and manner of articulation of word first phonemes), the speed of lexical or semantic decision regarding the same words has been mostly influenced by their lexical frequency or imageability (Balota, Burgess, Cortese, & Adams, 2002; Balota, Cortese, Sergent-Marshall, Spieler, & Yap, 2004; Balota & Chumbley, 1984; Ferrand et al., 2011; Kawamoto, Kello, Higareda, & Vu, 1999; Schilling, Rayner, & Chumbley, 1998; Yap, Pexman, Wellsby, Hargreaves, & Huff, 2012).

Yet behavioral evidence alone cannot distinguish between two alternative ways in which the task may modulate word processing. One option is that the pursued goal affects word recognition, i.e., the way relevant information is retrieved from a written word. Alternatively, the goal may affect the subsequent stage of decision making, which determines how the retrieved information is used to achieve the intended result. Information about exactly which stages of word recognition are affected can be obtained from electrophysiological studies, which make it possible to detect the exact time at which the word recognition process is penetrated by task demands.

Electrophysiologically, the majority of research addressing the task effects on the brain response to written word was concentrated on the N400 component of event-related potentials (ERP) - a negative deflection that peaks at approximately 400 ms after stimulus-onset and is thought to reflect the processing of word semantics. These studies demonstrated that stronger emphasis placed on semantic attributes of words by the task demands enhanced N400 suggesting that a goal penetrates into word semantic processing (Bentin, Kutas, & Hillyard, 1993, 1995).

Recent neurophysiological studies have demonstrated that task demands can modulate word recognition at much earlier latencies than the N400 time window. Ruz and Nobre (2008), using a cuing paradigm, showed that a negative ERP deflection peaking at 200 msec post-stimulus was larger when attention was oriented toward orthographic rather than phonological attributes of words. Strijkers and colleagues (2011) reported that the brain electrical response in reading aloud versus semantic categorization tasks starts to dissociate around 170 msec after word onset. Similarly, Mahé, Zesiger and Laganaro (2015) observed early differences between lexical decision and reading aloud in the ERP waveform from ~180 ms to the end of the analyzed interval (i.e., 500 ms). Chen and colleagues (2013), using MEG, found that different task sets (lexical decision, semantic decision and silent reading) affect word processing already within the first 200 msec after word presentation. Stronger activation was observed for lexical decision in areas involved in orthographic and semantic processes (such as the left inferior temporal cortex and bilateral anterior temporal lobe). Thus, early modulations of the word-related ERP are especially pronounced if the task demands direct attention towards semantic or lexical features of the perceived word. The early time window in which these effects occurred is theoretically consistent with the results of other studies demonstrating the early onset of “rudimentary” semantic analysis of the presented word (e.g., Kissler, Herbert, Winkler, & Junghofer, 2009).

Summing up, the discussed results provide substantial support for the view that even the earliest stages of word recognition are susceptible to top-down modulations when the presented word is a target for the task in hand. In the current study we aimed to examine whether the word processing is affected by the task when the presented word is just a cue which triggers a subsequent memory search for the target word. We compared brain response elicited by silent reading of a noun cue either without additional task (SR) or with a requirement to further produce an action verb associated with the cue (verb generation, VG). In contrast to the paradigms used in the previous studies, here the presented word is not an immediate processing target. Therefore, our experimental design enables us to reduce unspecific and very powerful effects of selective attention to a target word, which may well explain all the previously obtained results. Using MEG and distributed source estimation procedure, we aimed to characterize how intention to produce a related word changes the recognition process of the input word and triggers computation of articulatory output.

Method

Participants

Thirty-five volunteers (age range 20–48, mean age 26, 16 females) underwent MEG recording. All participants were native Russian-speakers, right-handed, had normal or corrected-to-normal vision and reported no neurological diseases or dyslexia. Two subjects were subsequently excluded from the analysis due to MEG acquisition error and another one due to insufficient quantity of correct responses in verb generation task. The final sample comprised 32 subjects. The study was approved by the ethical committee of the Moscow State University of Psychology and Education.

Materials

One hundred thirty Russian nouns were selected as stimuli for silent reading and verb generation tasks based on the criteria that the words were concrete and contained between 4 and 10 letters. The average word length was 5.7 letters. The word form frequency was obtained from Lyashevskaya and Sharov’s frequency dictionary (2009) and the average was 49.9 ipm.

Design and procedure

The participants were visually presented with the noun cues split into 14 blocks of 8 nouns each and 2 blocks containing 9 nouns. The cues within a block were randomized and presented in white font on a black background on a screen placed 1.5 m in front of the participant. The size of the stimuli did not exceed 5° of visual angle. The experiment was implemented in the Presentation software (Neurobehavioral Systems, Inc., Albany, California, USA).

Each noun was presented in two different experimental sessions. In the SR session the participant’s task was to read the words silently. During the reading task the stimulus was presented for 1000 ms, and the white fixation cross preceding the cue was presented for 300 ms with a jitter of 0-200 ms. In the VG session the participant was required to produce a verb associated with the presented noun by answering the question of what this noun does. In the verb generation task each noun remained on the screen for 3500 ms and was preceded by a white fixation cross presented for 300-500 ms.
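The block structure and trial timing described above can be sketched as follows. This is only an illustration under stated assumptions: the function and variable names are hypothetical, the noun labels are placeholders, and the fixation jitter is drawn from a uniform distribution, which the text does not specify. The experiment itself was run in the Presentation software, not in Python.

```python
import random

# Values taken from the text; all names below are illustrative only.
BLOCK_SIZES = [8] * 14 + [9] * 2          # 14 blocks of 8 nouns + 2 blocks of 9
TIMING_MS = {
    "SR": {"fixation": (300, 500), "stimulus": 1000},   # 300 ms + 0-200 ms jitter
    "VG": {"fixation": (300, 500), "stimulus": 3500},
}


def build_session(nouns, task, rng):
    """Split nouns into blocks, shuffle within each block, attach timings."""
    assert len(nouns) == sum(BLOCK_SIZES)
    lo, hi = TIMING_MS[task]["fixation"]
    trials, start = [], 0
    for block_index, size in enumerate(BLOCK_SIZES):
        block = list(nouns[start:start + size])
        rng.shuffle(block)                 # randomize cue order within the block
        for noun in block:
            trials.append({
                "block": block_index,
                "noun": noun,
                "fixation_ms": rng.randint(lo, hi),   # assumed uniform jitter
                "stimulus_ms": TIMING_MS[task]["stimulus"],
            })
        start += size
    return trials


rng = random.Random(0)
nouns = [f"noun_{i:03d}" for i in range(130)]   # placeholder stimulus labels
sr_trials = build_session(nouns, "SR", rng)
vg_trials = build_session(nouns, "VG", rng)
print(len(sr_trials), sr_trials[0]["stimulus_ms"], vg_trials[0]["stimulus_ms"])
```

The block sizes sum to the 130 nouns reported in the Materials section, and every noun appears once in each session.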

In the VG session participants’ vocal responses were tape recorded and checked for response errors. Trials with semantically unrelated responses, incomprehensible verbalizations, imprecise vocalization onsets, or pre-stimulus intervals that overlapped with the vocal response to the previous stimulus were excluded from further analyses. As the verb responses were meant to be inflected for person and number and could be put into the reflexive form, we considered semantically correct but erroneously inflected verbs (e.g. “kvartyra - ubirayet/an apartment cleans” instead of “kvartyra ubirayetsya/an apartment is cleaned”) as errors and also removed them from the subsequent analysis.

MEG data acquisition

MEG data were acquired inside a magnetically shielded room (AK3b, Vacuumschmelze GmbH, Hanau, Germany) using a dc-SQUID Neuromag™ Vector View system (Elekta-Neuromag, Helsinki, Finland) comprising 204 planar gradiometers and 102 magnetometers. Data were sampled at 1000 Hz and band-pass filtered at 0.03-333 Hz. The participants' head shapes were collected with a 3Space Isotrack II System (Fastrak Polhemus, Colchester, VA) by digitizing three anatomical landmark points (nasion, left and right preauricular points) and additional randomly distributed points on the scalp. During the recording, the position and orientation of the head were monitored by four Head Position Indicator (HPI) coils. The electrooculogram (EOG) was registered with two pairs of electrodes located above and below the left eye and at the outer canthi of both eyes for recording of vertical and horizontal eye movements, respectively. Structural MRIs were acquired for 28 participants with a 1.5 T Philips Intera system and were used for reconstruction of the cortical surface using Freesurfer software (http://surfer.nmr.mgh.harvard.edu/). Head models for the remaining five participants could not be obtained because of MRI acquisition errors.

MEG pre-processing

The raw data were subjected to the temporal signal space separation (tSSS) method (Taulu, Simola, & Kajola, 2005) implemented in the MaxFilter program (Elekta Neuromag software), which suppresses magnetic interference coming from sources distant from the sensor array. Biological artifacts (cardiac fields, eye movements, myogenic activity) were corrected using the SSP algorithm implemented in Brainstorm software (Tadel, Baillet, Mosher, Pantazis, & Leahy, 2011). To compensate for within-block head movement (as measured by the HPI coils), a movement compensation procedure was applied. For sensor-space analysis, the data were converted to a standard head position (x = 0 mm; y = 0 mm; z = 45 mm) across all blocks.

Data were divided into epochs of 1500 ms, from 500 ms before to 1000 ms after stimulus onset. Epochs were rejected if the peak-to-peak value over the epoch exceeded 3 × 10⁻¹⁰ T/m (gradiometers) or 12 × 10⁻¹⁰ T/m (magnetometers).
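As a rough illustration of the epoching and rejection step, the sketch below cuts a 1500 ms window around an onset and applies the peak-to-peak criterion. It assumes a 1000 Hz sampling rate as stated in the acquisition section; the channel names, helper functions and synthetic signals are hypothetical and are not taken from the actual pipeline.

```python
GRAD_LIMIT = 3e-10   # peak-to-peak limit for planar gradiometers (value from the text)
MAG_LIMIT = 12e-10   # peak-to-peak limit for magnetometers (value from the text)


def extract_epoch(signal, onset, sfreq=1000, tmin_ms=-500, tmax_ms=1000):
    """Cut one epoch around a stimulus onset given as a sample index."""
    start = onset + tmin_ms * sfreq // 1000
    stop = onset + tmax_ms * sfreq // 1000
    if start < 0 or stop > len(signal):
        raise ValueError("epoch window falls outside the recording")
    return signal[start:stop]


def peak_to_peak(samples):
    """Difference between the largest and smallest sample in the epoch."""
    return max(samples) - min(samples)


def keep_epoch(epoch, channel_types):
    """epoch maps channel name -> samples; reject if any channel exceeds its limit."""
    for name, samples in epoch.items():
        limit = GRAD_LIMIT if channel_types[name] == "grad" else MAG_LIMIT
        if peak_to_peak(samples) > limit:
            return False
    return True


# Synthetic one-channel recordings sampled at 1000 Hz (not real MEG data).
quiet = [1e-11 * ((i % 7) - 3) for i in range(3000)]
spiky = list(quiet)
spiky[1200] = 5e-10                          # artificial artifact 200 ms after onset

onset = 1000
types = {"grad_1": "grad", "mag_1": "mag"}   # hypothetical channel names
clean_epoch = {"grad_1": extract_epoch(quiet, onset)}
noisy_epoch = {"grad_1": extract_epoch(spiky, onset)}
mag_epoch = {"mag_1": extract_epoch(spiky, onset)}
print(len(clean_epoch["grad_1"]), keep_epoch(clean_epoch, types), keep_epoch(noisy_epoch, types))
```

In this toy case the same artificial spike leads to rejection on a gradiometer channel but stays below the larger magnetometer limit.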

MEG data analysis

The difference in the magnitude of the noun-evoked response during the silent reading versus the verb generation task was examined using Statistical Parametric Mapping software (SPM12: Wellcome Trust Centre for Neuroimaging, London, http://www.fil.ion.ucl.ac.uk/spm). For analysis of evoked magnetic fields, the planar gradiometer data were converted to a Matlab-based SPM format and baseline corrected over the -350 to -50 ms prestimulus interval. The epoched data were averaged within each task separately, using an SPM built-in averaging procedure (Holland, & Welsch, 1977). The averaged data from each pair of planar gradiometers were combined by calculating the root-mean-square values. The resulting 3D files with space (32 × 32 pixels) and time (1000 ms) dimensions were converted to images in the Neuroimaging Informatics Technology Initiative (NIfTI) format.
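The baseline correction and the combination of a planar gradiometer pair can be illustrated with a minimal sketch. It assumes one sample per millisecond and defines the root-mean-square of a pair as sqrt((a² + b²) / 2); some toolboxes instead use the vector norm without the factor 1/2, so the normalization here is an assumption rather than a description of the SPM routine. All function names are hypothetical.

```python
import math


def baseline_correct(samples, times_ms, t_start=-350, t_end=-50):
    """Subtract the mean of the baseline window from every sample."""
    base = [s for s, t in zip(samples, times_ms) if t_start <= t <= t_end]
    offset = sum(base) / len(base)
    return [s - offset for s in samples]


def combine_planar_rms(grad_a, grad_b):
    """Sample-by-sample root-mean-square of two orthogonal planar gradiometers."""
    return [math.sqrt((a * a + b * b) / 2.0) for a, b in zip(grad_a, grad_b)]


# Synthetic averaged responses for one gradiometer pair (arbitrary units).
times_ms = list(range(-500, 1000))
grad_a = [2.0 + (3.0 if t >= 0 else 0.0) for t in times_ms]    # offset 2, step of 3
grad_b = [-1.0 + (4.0 if t >= 0 else 0.0) for t in times_ms]   # offset -1, step of 4

a_corr = baseline_correct(grad_a, times_ms)
b_corr = baseline_correct(grad_b, times_ms)
combined = combine_planar_rms(a_corr, b_corr)
print(round(combined[0], 4), round(combined[-1], 4))
```

Because the combined signal is non-negative, it reflects response magnitude regardless of field direction, which is what the sensor-level comparison of response strength relies on.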

For statistical analysis the topography x time images were smoothed in space-time using a Gaussian smoothing kernel with Full Width Half Maximum of 8 mm × 8 mm × 8 ms to ensure that the images conform to the assumptions of Random Field Theory (Kilner, & Friston, 2010). Then, the smoothed images from SR and VG tasks were subjected to a paired t-test. The resulting statistical parametric maps underwent the false discovery rate (FDR) correction with cluster-level threshold of p < 0.05. The clusters that survived cluster-level correction were used to guide the subsequent analysis in the source space.
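To make the smoothing parameters concrete, the sketch below converts a Full Width Half Maximum to the standard deviation of a Gaussian and applies the kernel along the time dimension only. It assumes one sample per millisecond; the truncation radius and edge handling are arbitrary choices for illustration, and the SPM smoothing routine used in the study may differ in these details.

```python
import math


def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel(fwhm, step=1.0, truncate=4.0):
    """Normalized 1-D Gaussian kernel; fwhm and step share the same unit."""
    sigma = fwhm_to_sigma(fwhm) / step            # sigma expressed in samples
    radius = int(truncate * sigma + 0.5)
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(samples, kernel):
    """Convolve with the kernel, replicating edge samples at the borders."""
    radius = len(kernel) // 2
    n = len(samples)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * samples[j]
        out.append(acc)
    return out


kernel = gaussian_kernel(fwhm=8.0, step=1.0)      # 8 ms FWHM at 1 ms per sample
impulse = [0.0] * 101
impulse[50] = 1.0
smoothed = smooth(impulse, kernel)
print(round(fwhm_to_sigma(8.0), 3), len(kernel))
```

With an 8 ms FWHM the standard deviation is roughly 3.4 ms, so the temporal smoothing is mild relative to the width of the evoked components discussed in the Results.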

The cortical sources of the evoked responses were modelled by a "depth-weighted" linear L2-minimum norm estimation method (Hämäläinen, & Ilmoniemi, 1994) implemented in Brainstorm software (Tadel et al., 2011). Only those 28 participants for whom MRI scans were obtained entered the source analysis. The individual ERFs for each task were computed by averaging the trials within the condition over a 350 ms prestimulus interval and a 1000 ms post-stimulus interval for each of the 306 sensors. The individual cortical surfaces were imported from FreeSurfer and tessellated with 15 000 nodes. The forward solution was calculated using the overlapping spheres approach (Huang, Mosher, & Leahy, 1999). The inverse solution was computed with the Brainstorm built-in minimum norm estimation algorithm using the default settings (“kernel only” as the output mode, 3 as the signal-to-noise ratio, the source orientation constrained to be perpendicular to the cortical surface, the depth weighting restricting source locations to the cortical surface, and the whitening PCA). A noise covariance matrix, necessary to control noise effects on the solution (Bouhamidi, & Jbilou, 2007), was calculated over the -250 to -150 ms baseline interval (Dale et al., 2000).
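For orientation, a depth-weighted L2 minimum norm estimate is commonly written in the form below. The symbols are not taken from the original text and are introduced here as assumptions: y(t) is the vector of sensor data, G the forward (gain) matrix, R the source covariance matrix that carries the depth weights, C the noise covariance matrix, and λ² the regularization parameter. Under a widely used convention for whitened data, λ² is set from the assumed signal-to-noise ratio, so that an SNR of 3 would correspond to λ² = 1/9. The exact Brainstorm implementation may differ in detail.

```latex
\hat{\mathbf{x}}(t) = \mathbf{R}\,\mathbf{G}^{\top}
\left(\mathbf{G}\,\mathbf{R}\,\mathbf{G}^{\top} + \lambda^{2}\,\mathbf{C}\right)^{-1}\mathbf{y}(t),
\qquad \lambda^{2} = \frac{1}{\mathrm{SNR}^{2}}
```

Because the operator applied to y(t) does not depend on the data, it can be computed once per participant and then applied to any averaged response, which is presumably what a "kernel only" output mode stores.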

The individual source maps were projected to the cortical surface of the Montreal Neurological Institute brain template (MNI-Colin27). Differences in source activation between verb generation and silent reading were tested via paired t-tests under significance level of p<0.05, uncorrected.
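The paired comparison applied at each cortical location can be sketched as below for a single location. The amplitudes are synthetic and the function name is hypothetical; in practice the p-value would be obtained from the t distribution with n - 1 degrees of freedom (27 for the 28 participants in the source analysis) using a statistics package.

```python
import math
from statistics import mean, stdev


def paired_t(cond_a, cond_b):
    """Paired t statistic and degrees of freedom for matched samples."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Synthetic source amplitudes for 28 hypothetical participants (arbitrary units).
sr = [10.0 + 0.1 * i for i in range(28)]
vg = [s + 0.5 + 0.2 * ((i % 3) - 1) for i, s in enumerate(sr)]

t_value, dof = paired_t(vg, sr)
print(dof, t_value > 0)
```

A positive t value in this sketch corresponds to a stronger response in VG than in SR, which is the direction of all effects reported in the Results.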

Results

Behavioral results

The average response time in overt verb generation task was 1.56 sec (SD = 0.2). Mean accuracy was 7% (SD = 3.9). The responses which were considered incorrect were removed from the subsequent analysis.

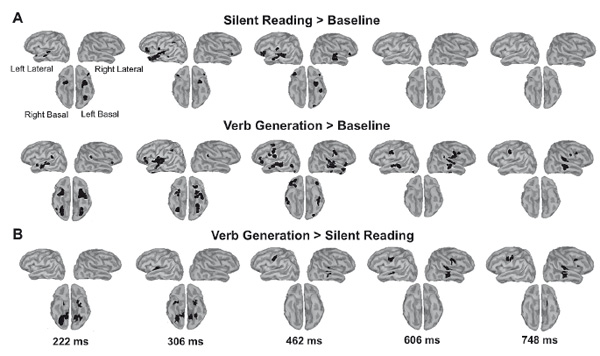

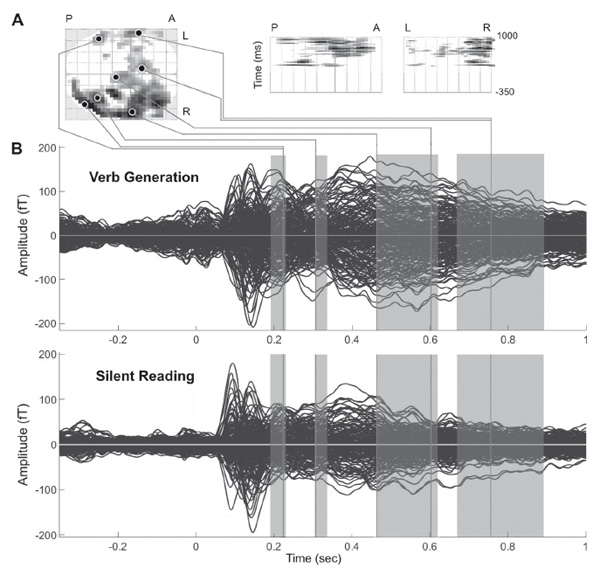

General time course of noun cue recognition

As shown in the butterfly plots for the VG and SR conditions (Figure 1B), the general pattern of the response to the written noun roughly coincided in both tasks, presumably reflecting the common process of word recognition. The noun presentation elicited an evoked response with two early narrow peaks around 100 and 140 ms after word onset followed by broader components at 200 and 400 ms. Based on the extensive literature on visual word recognition (for recent reviews see Carreiras, Armstrong, Perea, & Frost, 2014; Grainger, & Holcomb, 2009) we identified the observed ERF deflections as MEG counterparts of the P100, N170, N200 and N400 components established in EEG studies (e.g., Hauk, & Pulvermüller, 2004; Holcomb, & Grainger, 2006; Kutas, & Federmeier, 2011; Maurer, Brandeis, & McCandliss, 2005). MNE source estimation revealed that the response emerged around 100 ms at the occipital cortex bilaterally, then shifted toward the inferior occipitotemporal cortex at 140 ms with a prominent left-hemispheric preponderance (the results are presented elsewhere (Butorina et al., submitted)). By 190 ms the activation reached the anterior part of the temporal lobes (ATL) bilaterally. During 200-300 ms the response also engaged the lateral surface of the left hemisphere, namely the ventrolateral prefrontal cortex (VLPFC), the superior and middle temporal gyri (STG, MTG) and the perisylvian region (Figure 2A). Around 460 ms the response in the temporal cortex became bilateral and was accompanied by left-lateralized activity in the ventral pericentral region and VLPFC. After 600 ms the response in SR decreased rapidly, in contrast to VG, where bilateral temporal and pericentral activity was present for another two hundred milliseconds - up to 800 ms post-stimulus. The spatial-temporal patterns observed under the SR and VG conditions are in good correspondence with features of the brain response described for word recognition (e.g., Dien, 2009; Grainger, & Holcomb, 2009; Pulvermüller, Shtyrov, & Hauk, 2009; Salmelin, 2007), thus confirming that both tasks consecutively activated the same brain areas associated with written word processing.

Figure 1. The brain response to the written nouns in verb generation (VG) and silent reading (SR) tasks: sensor-level analysis. (A) Three projections (SPM glass image) show the sensor array from above (transverse), the right (sagittal), and the back (coronal). A — anterior, P — posterior, L-left and R-right parts of the array. Areas in black correspond to spatial clusters with significant sensor-level differences in ERF between VG and SR task (paired t-test, p < 0.05, FDR-corrected). All the clusters reflect greater response under VG versus SR condition and occur within four time windows of 191–227 ms, 306–340 ms, 462–619 ms and 676–891 ms after the noun onset. The local peaks are reported as small black circles. Note, that no clusters with opposite direction of the effect were found. (B) Butterfly plots of MEG evoked waveforms from 306 MEG channels. Strength of magnetic fields is represented in femto-Tesla (fT). Zero point denotes the onset of the noun cue. The increase of the response around zero is presumably related to the stronger attention allocation to the fixation cross under VG condition. Shaded rectangles denote the time windows with significant VG-SR difference. The gray lines indicate the time points of the local peaks of SPM clusters.

Figure 2. Reconstructed cortical activation that contributes to significant SR-VG differences. The time points correspond to the temporal peaks of sensor-level significant difference. (A) Cortical response evoked by visually presented noun in verb generation and silent reading task. Note that noun-evoked activation progressed along the posterior-anterior axis from posterior sensory regions to more anterior multimodal association areas. (B) The reconstruction of cortical sources displaying greater response in VG versus SR task. All images were thresholded using a voxel-wise statistical threshold of p<0.05 with cluster size more than 10 voxels.

Task effects on noun cue recognition

Sensor-level analysis

Figure 1A presents the spatial-temporal clusters of sensor-level SR-VG differences that survived cluster-level FDR correction with a threshold at p<0.05. The evoked response to a written noun was stronger in the VG compared to the SR task for all the significant clusters, while no clusters demonstrating the opposite direction of the task effect were found. As shown in the butterfly plots (Figure 1B), the two earliest components at 100 and 140 ms (P100m, N170m) remained unaffected by the task demands. The initial task-related difference in the response strength appeared in the time window of the N200m component - at 191-227 ms - and was concentrated over the posterior boundary sensors on the right side of the array (p<0.0001, FDR-corr.) and over the lateral sensors on the left (p<0.0001, FDR-corr.). The following cluster of differential response emerged at 306-340 ms over the posterior-lateral sensors of the right hemisphere (p<0.0001, FDR-corr.). After that, the peak of differential activity shifted to the anterior half of the sensor array. The time window from 460 to 900 ms post-stimulus was dominated by widespread clusters over the right anterior and lateral sensors in the 462-619 and 676-891 ms time windows (p<0.0001, FDR-corr.), while we also detected symmetrical but smaller clusters of SR-VG difference in the left-hemispheric part of the sensor array. Thus, the task effects on written word processing were highly reliable and were confined to four consecutive time windows of gradually increasing duration.

Source-level analysis

To determine the cortical areas that contribute to the significant SR-VG differences in the brain response at the sensor level, we used the temporal clusters as a mask defining the time windows of interest for source-level analysis. Given that the source-space analysis was guided by FDR-corrected sensor-level results, the statistical threshold in the source space was set at p<0.05 (peak-level, uncorrected) with a cluster size of more than 10 adjacent voxels.

The early time window - 191-227 ms post-stimulus - was characterized by a differential response in regions on the basal surface of the occipitotemporal lobes (Figure 2B). The activity in the left ATL and the left inferior occipitotemporal cortex was present under both conditions but was stronger in VG than in SR, while the right occipitotemporal region was recruited into the response only under the VG condition. During the following time window at 306-340 ms the task effect in the ATL and inferior occipitotemporal cortex was bilateral and also engaged the left transverse temporal gyrus comprising primary auditory cortex. At 462 ms the difference in the response was observed in the right STG and MTG and in the left ventral sensorimotor cortex - in the inferior region of the left precentral and postcentral gyri. The differential activation of these regions spread to homotopic areas of both hemispheres, reached its maximum at 600 ms, and was then sustained for another 200 ms up to the end of the measurable brain response.

Discussion

Here we present MEG evidence that task demands penetrate into early (200-300 ms) and late (500-800 ms) stages of written word processing. We varied task demands for silent reading of a written noun by imposing an instruction either to perform no further action (silent reading - SR) or to name a related verb afterwards (verb generation - VG). The long verb production time (1.5 s on average) in the latter case precluded the possibility that any changes in the processing of a written noun within 100 - 800 ms after its presentation onset were simply elicited by preparation of the motor response.

Our data indicate that top–down modulation affects relatively early processes in visual word recognition. More difficult task demands enhanced the brain response at the stage of word form processing (Figure 2), while sparing the low-level visual processing of stimulus features, i.e., contrast, figure-ground segregation, etc. The lack of task effects on average word activation at the latency of the P100m and N170m components (Figure 1) signifies that our tasks were similar with respect to visual attention, which is known to increase these ERP components (e.g., Hillyard & Anllo-Vento, 1998). The visual word form area (VWFA), located in the left inferior occipitotemporal cortex, and the left temporal pole were the first brain areas to show an elevated response in the VG as compared to the SR condition at 190-230 ms (Figure 2). With an 80 ms delay, similar differential activation was observed in the homotopic areas of the right hemisphere (Figure 2). While the VWFA is thought to recognize a letter string as a word form stored in long-term memory (Cohen et al., 2000; Dehaene, & Cohen, 2011), bilateral regions of the temporal pole have been assigned the role of a semantic hub linking word forms with distributed representations of the same word in different sensory modalities (Binney, Embleton, Jefferies, Parker, & Lambon Ralph, 2010; Patterson, Nestor, & Rogers, 2007). Greater engagement of the temporal pole within the 200-250 ms window in the VG task may promote early semantic analysis of “word meaningfulness”, which, according to EEG reading research, also peaks around 250 ms after word onset (for a review see Martín-Loeches, 2007).

Moreover, our findings clearly contradict the claim that the role of the VWFA is “strictly visual and prelexical” (Dehaene, & Cohen, 2011). Our observation of "what-I-will-do-next-with-this-word" effects on activation in the VWFA and the more anterior part of the ventral temporal lobe lends firm support to an interactive view of the role of the VWFA and other areas of the ventral visual stream in word processing (Price, & Devlin, 2011).

In this respect, we extend and substantiate the previously existing literature, which implied VWFA activity to be sensitive to semantic or lexical decision directly related to a written word (Chen, Davis, Pulvermüller, & Hauk, 2015), and consequently was not immune to the unspecific modulatory effect of “word targetness”. Our data revealed a greater involvement of VWFA and its right hemispheric counterpart even in the case when the presented word by itself did not represent the target of the task. We speculate that a participant's need to further proceed with the retrieved information regarding the written word intensifies and deepens visual processing within the first 200-300 msec after a word presentation through integration of the task demands with the processing of immediate visual input.

The shift of activation from basal posterior temporal to lateral anterior areas of the temporal and frontal lobes (Figure 2) at the latencies of the N400m was common to both the SR and VG tasks and indicated that the crucial point of the word recognition process in each task was the retrieval of word semantics. It may therefore be surprising that we did not find any reliable task effects within the time window of the "semantic" N400 component (Figure 2). Our VG task required participants to choose a verb associated with the presented noun, thus encouraging the retrieval of the noun's semantic features related to actions, and the difficulty of such retrieval did affect the "semantic brain activity" within the N400 time window in our previous analysis (Butorina et al., submitted). One possibility is that the existing task effect did not survive the rigorous statistical corrections performed in the current study. Certainly, further research is needed to clarify the origin of this puzzling result.

In addition to the early task modulations, it is also striking that we found a robust but late (500 ms after the noun onset) and temporally protracted (500 - 800 ms) effect of task demands on the activity of lateral temporal regions and, to a lesser extent, of ventral posterior frontal regions. Interestingly, this effect was more prominent in the right hemisphere. This finding is not supported by previous studies that, like ours, used non-invasive MEG recording of human brain activity. However, our results are fully consistent with those obtained with subdural electrodes implanted in various brain regions of pre-surgical epilepsy patients. In that study the patients performed different variants of an overt word repetition task thoughtfully designed to test a hypothesis on the role of sensorimotor activations in speech perception (Cogan et al., 2014). Critically, the ECoG study observed that word presentation elicited robust bilateral activation in the middle temporal gyrus, superior temporal gyrus, somatosensory, motor and premotor cortex, as well as in the supramarginal gyrus and inferior frontal gyrus, i.e., roughly in the same cortical regions and at the same latency as in the current MEG study. By manipulating various task demands the authors showed that this bilateral activation represents sub-vocal sensorimotor transformations that are specific to speech and unify perception- and production-based representations to facilitate access to higher-order language functions. Given this evidence, our finding of an enhanced late response in the same areas during an even more demanding task may reflect enhanced sensorimotor transformation of the already perceived noun when there is a need for further decision making.

A long-standing notion is that the motor system may contribute to speech perception by internally emulating the sensory consequences of articulatory gestures (Liberman & Mattingly, 1985; Liberman & Whalen, 2000). This does not mean that this mechanism is necessary to understand speech. Rather, it serves as an assisting device when word-related decision making faces difficult circumstances. Previous studies have shown that consulting internal sensorimotor models improves speech perception when the speech input is ambiguous and/or noisy (Meister et al., 2007; Möttönen, & Watkins, 2009). Our study reveals the same mechanism coming into play when there is a need to maintain and manipulate a perceived written noun in working memory in order to produce its verb associates. Reverberating sensorimotor loops related to inner phonemic emulation of the presented word have been linked to phonological working memory by other authors (Hickok, & Poeppel, 2007; Buchsbaum et al., 2011). However, our results demonstrate for the first time that an intensified engagement of the ventral motor cortex produced by a need for deep word processing is automatic and does not occur consciously as sub-vocal rehearsal.

Intriguingly, higher task demands in our study seem to increasingly recruit right-hemisphere networks in such transformative activity. In accord with the hypothesis of Cogan et al. (2014), our MEG data obtained in typical participants argue against the prevailing dogma that dorsal stream sensorimotor functions are highly left lateralized. Thus, the current study contributes to the growing evidence from lesion, imaging, and electrophysiological data that demonstrates complex lateralization patterns for different language operations (for review, see Poeppel, 2014).

Conclusion

Our results suggest that a remote goal plays a pivotal role in enhanced recruitment of cortical structures underlying orthographic, semantic and sensorimotor dimensions of written word perception from the early processing stages. They also show that passive speech perception induces activation of brain areas involved in speech production. The increased recruitment of these areas in a more demanding task could reflect an automatic mapping of phonemes onto articulatory motor programs, a process involved in covert imitative mechanisms or internal speech, which might, in turn, improve comprehension of the percept. During silent reading, a need for deep processing of linguistic input may play a central role in linking speech perception with speech production, consistent with theories that emphasize the integration of sensory and motor representations in understanding speech (Hickok & Poeppel, 2000; Scott & Wise, 2004). Surprisingly, we found that in order to fulfil a more challenging goal the brain progressively engaged the resources of the right hemisphere throughout all stages of silent reading. This conclusion fits well with mounting evidence on the role of the right hemisphere in speech perception and higher-order cognition (Velichkovsky, Krotkova, Sharaev, & Ushakov, 2017).

Acknowledgments

This study was in part supported by the Russian Science Foundation, grant RScF 14-28-00234. We wish to thank B.M. Velichkovsky for discussion of results.

References

Balota, D. A., Black, S. R., & Cheney, M. (1992). Automatic and attentional priming in young and older adults: Reevaluation of the two-process model. Journal of Experimental Psychology: Human Perception and Performance, 18(2), 485-502. doi: 10.1037/0096-1523.18.2.485

Balota, D. A., Burgess, G. C., Cortese, M. J., & Adams, D. R. (2002). The word-frequency mirror effect in young, old, and early-stage Alzheimer’s disease: Evidence for two processes in episodic recognition performance. Journal of Memory and Language, 46(1), 199-226. https://doi.org/10.1006/jmla.2001.2803

Balota, D. A., & Chumbley, J. I. (1984). Are lexical decisions a good measure of lexical access? The role of word frequency in the neglected decision stage. Journal of Experimental Psychology: Human Perception and Performance, 10(3), 340–357. doi: 10.1037/0096-1523.10.3.340

Balota, D. A., Cortese, M. J., Sergent-Marshall, S. D., Spieler, D. H., & Yap, M. J. (2004). Visual word recognition of single-syllable words. Journal of Experimental Psychology: General, 133(2), 283–316. doi: 10.1037/0096-3445.133.2.283

Balota, D. A., & Yap, M. J. (2006). Attentional control and the flexible lexical processor: Explorations of the magic moment of word recognition. From Inkmarks to Ideas: Current Issues in Lexical Processing, 229.

Bentin, S., Kutas, M., & Hillyard, S. A. (1993). Electrophysiological evidence for task effects on semantic priming in auditory word processing. Psychophysiology, 30(2), 161–169. doi: 10.1111/j.1469-8986.1993.tb01729.x

Bentin, S., Kutas, M., & Hillyard, S. A. (1995). Semantic processing and memory for attended and unattended words in dichotic listening: Behavioral and electrophysiological evidence. Journal of Experimental Psychology: Human Perception and Performance, 21(1), 54–67. doi: 10.1037/0096-1523.21.1.54

Besner, D. (2001). The myth of ballistic processing: Evidence from Stroop’s paradigm. Psychonomic Bulletin & Review, 8(2), 324–330. doi: 10.3758/BF03196168

Besner, D., Stolz, J. A., & Boutilier, C. (1997). The Stroop effect and the myth of automaticity. Psychonomic Bulletin & Review, 4(2), 221–225. doi: 10.3758/BF03209396

Binney, R. J., Embleton, K. V., Jefferies, E., Parker, G. J. M., & Lambon Ralph, M. A. (2010). The ventral and inferolateral aspects of the anterior temporal lobe are crucial in semantic memory: evidence from a novel direct comparison of distortion-corrected fMRI, rTMS, and semantic dementia. Cerebral Cortex, 20(11), 2728-2738. doi: 10.1093/cercor/bhq019

Bodner, G. E., & Masson, M. E. J. (2004). Beyond binary judgments: Prime validity modulates masked repetition priming in the naming task. Memory & Cognition, 32(1), 1-11. doi: 10.3758/BF03195815

Bouhamidi, A., & Jbilou, K. (2007). Sylvester Tikhonov-regularization methods in image restoration. Journal of Computational and Applied Mathematics, 206(1), 86–98. doi: 10.1016/j.cam.2006.05.028

Buchsbaum, B. R., Baldo, J., Okada, K., Berman, K. F., Dronkers, N., D’Esposito, M., & Hickok, G. (2011). Conduction aphasia, sensory-motor integration, and phonological short-term memory–an aggregate analysis of lesion and fMRI data. Brain and Language, 119(3), 119-128. doi: 10.1016/j.bandl.2010.12.001

Butorina A.V., Pavlova A.A., Nikolaeva A.Y., Prokofyev A.O., Bondarev D.P., & Stroganova T.A. (2017). Simultaneous processing of noun cue and to-be-produced verb in verb generation task: Electromagnetic evidence. Frontiers in Human Neuroscience, 11, 279. doi: 10.3389/fnhum.2017.00279

Carr, T. H., & Dagenbach, D. (1990). Semantic priming and repetition priming from masked words: Evidence for a center-surround attentional mechanism in perceptual recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 16(2), 341-350. doi: 10.1037/0278-7393.16.2.341

Carreiras, M., Armstrong, B. C., Perea, M., & Frost, R. (2014). The what, when, where, and how of visual word recognition. Trends in Cognitive Sciences, 18(2), 90-98. doi: 10.1016/j.tics.2013.11.005

Chen, Y., Davis, M. H., Pulvermüller, F., & Hauk, O. (2015). Early visual word processing is flexible: Evidence from spatiotemporal brain dynamics. Journal of Cognitive Neuroscience, 27(9), 1738-1751. doi: 10.1162/jocn_a_00815

Cogan, G. B., Thesen, T., Carlson, C., Doyle, W., Devinsky, O., & Pesaran, B. (2014). Sensory–motor transformations for speech occur bilaterally. Nature, 507(7490), 94-98. doi: 10.1038/nature12935

Cohen, L., Dehaene, S., Naccache, L., Lehericy, S., Dehaene-Lambertz, G., Henaff, M. A., & Michel, F. (2000). The visual word form area: Spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain, 123(2), 291–307. doi: 10.1093/brain/123.2.291

Dale, A. M., Liu, A. K., Fischl, B. R., Buckner, R. L., Belliveau, J. W., Lewine, J. D., & Halgren, E. (2000). Dynamic statistical parametric mapping: Combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron, 26(1), 55-67. doi: 10.1016/S0896-6273(00)81138-1

Dehaene, S., & Cohen, L. (2011). The unique role of the visual word form area in reading. Trends in Cognitive Sciences, 15(6), 254–262. doi: 10.1016/j.tics.2011.04.003

Dien, J. (2009). The neurocognitive basis of reading single words as seen through early latency ERPs: A model of converging pathways. Biological Psychology, 80(1), 10–22. doi: 10.1016/j.biopsycho.2008.04.013

Ferrand, L., Brysbaert, M., Keuleers, E., New, B., Bonin, P., Méot, A., … Pallier, C. (2011). Comparing word processing times in naming, lexical decision, and progressive demasking: Evidence from Chronolex. Frontiers in Psychology, 2, 306. doi: 10.3389/fpsyg.2011.00306

Fodor, J. A. (1983). The modularity of mind: An essay on faculty psychology. MIT press.

Forster, K. I., & Davis, C. (1984). Repetition priming and frequency attenuation in lexical access. Journal of Experimental Psychology: Learning, Memory, and Cognition, 10(4), 680–698. doi: 10.1037/0278-7393.10.4.680

Grainger, J., & Holcomb, P. (2009). Watching the word go by: On the time-course of component processes in visual word recognition. Language and Linguistics Compass, 3(1), 128–156. doi: 10.1111/j.1749-818x.2008.00121.x

Hämäläinen, M. S., & Ilmoniemi, R. J. (1994). Interpreting magnetic fields of the brain: minimum norm estimates. Medical & Biological Engineering & Computing, 32(1), 35–42. doi: 10.1007/BF02512476

Hauk, O., & Pulvermüller, F. (2004). Effects of word length and frequency on the human event-related potential. Clinical Neurophysiology, 115(5), 1090–1103. doi: 10.1016/j.clinph.2003.12.020

Hickok, G., & Poeppel, D. (2007). The cortical organization of speech processing. Nature Reviews Neuroscience, 8(5), 393-402. doi: 10.1038/nrn2113

Hillyard, S. A., & Anllo-Vento, L. (1998). Event-related brain potentials in the study of visual selective attention. Proceedings of the National Academy of Sciences of the United States of America, 95(3), 781-787. doi: 10.1073/pnas.95.3.781

Holcomb, P. J., & Grainger, J. (2006). On the time course of visual word recognition: An event-related potential investigation using masked repetition priming. Journal of Cognitive Neuroscience, 18(10), 1631-1643. doi: 10.1162/jocn.2006.18.10.1631

Holland, P. W., & Welsch, R. E. (1977). Robust regression using iteratively reweighted least-squares. Communications in Statistics - Theory and Methods, 6(9), 813–827. doi: 10.1080/03610927708827533

Huang, M. X., Mosher, J. C., & Leahy, R. M. (1999). A sensor-weighted overlapping-sphere head model and exhaustive head model comparison for MEG. Physics in Medicine and Biology, 44(2), 423. Retrieved from http://stacks.iop.org/0031-9155/44/i=2/a=010

Kawamoto, A. H., Kello, C. T., Higareda, I., & Vu, J. V. Q. (1999). Parallel processing and initial phoneme criterion in naming words: Evidence from frequency effects on onset and rime duration. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25(2), 362–381. doi: 10.1037/0278-7393.25.2.362

Kilner, J. M., & Friston, K. J. (2010). Topological inference for EEG and MEG. The Annals of Applied Statistics, 4(3), 1272–1290. doi: 10.1214/10-AOAS337

Kissler, J., Herbert, C., Winkler, I., & Junghofer, M. (2009). Emotion and attention in visual word processing—An ERP study. Biological Psychology, 80(1), 75–83. doi: 10.1016/j.biopsycho.2008.03.004

Kutas, M., & Federmeier, K.D. (2011). Thirty years and counting: Finding meaning in the N400 component of the event related brain potential (ERP). Annual Review of Psychology, 62, 621. doi: 10.1146/annurev.psych.093008.131123

Liberman, A.M., & Mattingly, I.G. (1985). The motor theory of speech perception revised. Cognition, 21(1), 1-36. doi: 10.1016/0010-0277(85)90021-6

Liberman, A.M., & Whalen, D.H. (2000). On the relation of speech to language. Trends in Cognitive Sciences, 4(5), 187-196. doi: 10.1016/S1364-6613(00)01471-6

Lyashevskaya, O.N., & Sharov, S.A. (2009). Chastotnyy slovar’ sovremennogo russkogo yazyka (na materialakh natsional’nogo korpusa russkogo yazyka) [The frequency dictionary of modern Russian language (based on the Russian National Corpus)]. Moscow: Azbukovnik.

MacLeod, C. M. (1991). Half a century of research on the Stroop effect: An integrative review. Psychological Bulletin, 109(2), 163–203. doi: 10.1037/0033-2909.109.2.163

MacLeod, C. M. (2005). The Stroop Task in cognitive research. In Cognitive methods and their application to clinical research (pp. 17–40). Washington: American Psychological Association. doi: 10.1037/10870-002

Mahé, G., Zesiger, P., & Laganaro, M. (2015). Beyond the initial 140 ms, lexical decision and reading aloud are different tasks: An ERP study with topographic analysis. NeuroImage, 122, 65–72. doi: 10.1016/j.neuroimage.2015.07.080

Marcel, A. J. (1983). Conscious and unconscious perception: An approach to the relations between phenomenal experience and perceptual processes. Cognitive Psychology, 15(2), 238–300. doi: 10.1016/0010-0285(83)90010-5

Martín-Loeches, M. (2007). The gate for reading: Reflections on the recognition potential. Brain Research Reviews, 53(1), 89–97. doi: 10.1016/j.brainresrev.2006.07.001

Maurer, U., Brandeis, D., & McCandliss, B. D. (2005). Fast, visual specialization for reading in English revealed by the topography of the N170 ERP response. Behavioral and Brain Functions, 1(1), 13. doi: 10.1186/1744-9081-1-13

Meister, I. G., Wilson, S. M., Deblieck, C., Wu, A. D., & Iacoboni, M. (2007). The essential role of premotor cortex in speech perception. Current Biology, 17(19), 1692-1696. doi: 10.1016/j.cub.2007.08.064

Möttönen, R., & Watkins, K. E. (2009). Motor representations of articulators contribute to categorical perception of speech sounds. Journal of Neuroscience, 29(31), 9819-9825. doi: 10.1523/JNEUROSCI.6018-08.2009

Neely, J. H. (1991). Semantic priming effects in visual word recognition: A selective review of current findings and theories. Basic Processes in Reading: Visual Word Recognition, 11, 264–336.

Neely, J. H., & Kahan, T. A. (2001). Is semantic activation automatic? A critical re-evaluation. In The nature of remembering: Essays in honor of Robert G. Crowder (pp. 69–93). American Psychological Association.

Patterson, K., Nestor, P. J., & Rogers, T. T. (2007). Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience, 8(12), 976–987. doi: 10.1038/nrn2277

Poeppel, D. (2014). The neuroanatomic and neurophysiological infrastructure for speech and language. Current Opinion in Neurobiology, 28, 142–149. doi: 10.1016/j.conb.2014.07.005

Posner, M. I., & Snyder, C. R. R. (1975). Facilitation and inhibition in the processing of signals. Attention and Performance V, 669–682.

Price, C. J., & Devlin, J. T. (2011). The Interactive Account of ventral occipitotemporal contributions to reading. Trends in Cognitive Sciences, 15(6), 246–253. doi: 10.1016/j.tics.2011.04.001

Pulvermüller, F., Shtyrov, Y., & Hauk, O. (2009). Understanding in an instant: Neurophysiological evidence for mechanistic language circuits in the brain. Brain and Language, 110(2), 81–94. doi: 10.1016/j.bandl.2008.12.001

Ruz, M., & Nobre, A. C. (2008). Attention modulates initial stages of visual word processing. Journal of Cognitive Neuroscience, 20(9), 1727–1736. doi: 10.1162/jocn.2008.20119

Salmelin, R. (2007). Clinical neurophysiology of language: The MEG approach. Clinical Neurophysiology, 118(2), 237–254. doi: 10.1016/j.clinph.2006.07.316

Schilling, H. E. H., Rayner, K., & Chumbley, J. I. (1998). Comparing naming, lexical decision, and eye fixation times: Word frequency effects and individual differences. Memory & Cognition, 26(6), 1270–1281. doi: 10.3758/BF03201199

Strijkers, K., Yum, Y. N., Grainger, J., & Holcomb, P. J. (2011). Early goal-directed top-down influences in the production of speech. Frontiers in Psychology, 2, 371. doi: 10.3389/fpsyg.2011.00371

Stroop, J. R. (1935). Studies of interference in serial verbal reactions. Journal of Experimental Psychology, 18(6), 643–662. doi: 10.1037/h0054651

Tadel, F., Baillet, S., Mosher, J. C., Pantazis, D., & Leahy, R. M. (2011). Brainstorm: A user-friendly application for MEG/EEG analysis. Computational Intelligence and Neuroscience, 2011, 8. doi: 10.1155/2011/879716

Taulu, S., Simola, J., & Kajola, M. (2005). Applications of the signal space separation method. IEEE Transactions on Signal Processing. doi: 10.1109/TSP.2005.853302

Velichkovsky, B.M. (2006). Cognitivnaya nauka: Osnovy psikhologii poznaniya [Cognitive science: Foundations of epistemic psychology] (Vol. 1). Moscow: Smysl / Academia.

Velichkovsky, B. M., Krotkova, O. A., Sharaev, M. G., & Ushakov, V. L. (2017). In search of the “I”: Neuropsychology of lateralized thinking meets Dynamic Causal Modeling. Psychology in Russia: State of the Art, 10(3), 7-27. doi: 10.11621/pir.2017.0301

Yap, M. J., Pexman, P. M., Wellsby, M., Hargreaves, I. S., & Huff, M. J. (2012). An abundance of riches: Cross-task comparisons of semantic richness effects in visual word recognition. Frontiers in Human Neuroscience, 6, 72. doi: 10.3389/fnhum.2012.00072

To cite this article: Pavlova A. A., Butorina A. V., Nikolaeva A. Y., Prokofyev A. O., Ulanov M. A., Stroganova T. A. (2017). Not all reading is alike: Task modulation of magnetic evoked response to visual word. Psychology in Russia: State of the Art, 10(3), 190-205.

The journal content is licensed with CC BY-NC “Attribution-NonCommercial” Creative Commons license.