Evidence-Based Practice for Psychologists in Education: A Comparative Study from the Czech Republic, Slovakia, and Slovenia

Abstract

Background. In recent decades, discussion has been increasing about guidelines for psychological interventions, evidence-based interventions (EBI), and evidence-based practice (EBP). These efforts have a longer tradition in medicine and psychiatry, but are increasingly present in the practice of school psychology. The creation, use, and implementation of EBP procedures protect psychologists from intuitive and non-scientific procedures that can harm clients, psychology, and its development.

Objective. The focus of this article is the EBP of school psychologists in the Czech Republic, Slovakia, and Slovenia. We investigated the degree to which psychologists implement EBP in their work in educational institutions, the domains in which they apply EBP most effectively, and the obstacles and needs regarding EBP in school psychology.

Design. Two hundred and two school psychologists answered a questionnaire about their application of EBP. The questionnaire contains categories about the sources of EBP, their availability, and the extent to which respondents apply EBP in specific domains of their work.

Results. The data show differences of low practical significance among respondents from the three countries. Respondents reported the highest values for the reliance of their work on professional cooperation, use of EBP principles in specific domains, and use of professional guidelines. Pearson correlations indicated positive associations among all substantive categories.

Conclusion. The preliminary results show that school psychologists are aware of the importance of applying EBP in practice, and highlight some of the obstacles that prevent them from cultivating psychological science in the interest of education.

Received: 13.08.2019

Accepted: 01.11.2019

Themes: Educational psychology

PDF: http://psychologyinrussia.com/volumes/pdf/2019_4/Psychology_4_2019_79-100_Juriševič.pdf

Pages: 79-100

DOI: 10.11621/pir.2019.0405

Keywords: school psychology, applied psychology, evidence-based practice, quality assurance, psychological science

Introduction

Psychological assistance to clients (students, parents, and colleagues) does not usually follow strict and pre-defined procedures and instructions. Instead, it requires creativity, experience, and intuition. Various psychological interventions are grounded in tradition, one’s own beliefs, or subjective theories, and a psychologist does not always get clear feedback on the effects of an intervention. Lilienfeld, Ammirati, and David (2012) speak of the risk of “naive realism”: a psychologist knows that an intervention was helpful to the client but does not know why. In fact, the outcome of an intervention is usually influenced by a variety of other circumstances.

People in helping professions, in our case school psychologists [1], therefore ask numerous questions: What made my interventions effective? How can professional procedures be distinguished from intuitive ones? What is the difference between scientific and non-scientific practice? A common component of practice is “working uncertainty”, which psychologists try to eliminate through a variety of measures and procedures.

Although intuition is considered a standard component of psychological practice, a scientific approach to procedures should not be neglected. A debate about the deep gap between science and practice has been going on for some time, and surveys are being conducted on what psychologists actually rely on in their practice and whether they know what makes their interventions effective. In recent decades, discussion has been increasing about guidelines for psychological interventions, evidence-based interventions (EBI), and evidence-based practice (EBP). These efforts have a longer tradition in medicine and psychiatry, but are also increasingly present in the practice of school psychology. The creation, use, and implementation of EBP procedures protect psychologists from intuitive and non-scientific procedures that can harm clients, psychology, and its development.

The main goal of this article is to present an empirical probe into the profession of school psychologists in three European countries: the Czech Republic, Slovakia, and Slovenia. We examine whether psychologists use scientific findings and evidence about the effectiveness of their work, in which domains they apply EBP, and what limitations and needs they encounter.

Definition of Evidence-Based Practice (EBP), Its History and Principles

EBP is generally described as the integration of the best research evidence with clinical expertise and the client’s preferences for treatment. Hoagwood and Johnson (2003) use the following definition:

Evidence based practice refers to a body of scientific knowledge, defined usually by reference to research methods or designs, about a range of service practices. EBP is a shorthand term denoting the quality, robustness, or validity of scientific evidence as it is brought to bear on these issues. (p. 5)

Evidence-based practice is now an important feature of health-care systems and policy. The beginnings of evidence-based reasoning and approaches are often associated with Cochrane’s (1972) argument for rigorous empirical verification of medical interventions in order to maximize the impact of health-care expenditures (as cited in Sackett, Rosenberg, Gray, Haynes, & Richardson, 1996). Interest in evidence-based health care was boosted by the Institute of Medicine (2001), which defined EBP as “the integration of best research evidence with clinical expertise and patient values” (p. 147).

Today, EBP and the creation and use of practical guidelines are applied in a variety of medical and related disciplines such as nursing (Correa-de-Araujo, 2016; Mason, Leavitt, & Chafee, 2002), mental health (Geddes, 2000), occupational therapy (Bennett & Bennett, 2000), and physical therapy (Maher et al., 2004). These disciplines strive to align health-care practices with the latest and best scientific findings in order to minimize variations in care and avoid unanticipated health outcomes (Correa-de-Araujo, 2016). Moreover, EBP has been extended to other disciplines such as social work (Cournoyer & Powers, 2002; Okpych & Yu, 2014; Patterson, Dulmus, & Maguin, 2012), human resource management (Briner, 2000), and education (Thomas & Pring, 2004).

Largely owing to the American Psychological Association’s (2006) report, EBP gained support and broad interest among psychologists of various specializations. The association defined evidence-based practice in psychology (EBPP) as:

The integration of the best available research with clinical expertise in the context of patient characteristics, culture, and preferences. The purpose of EBPP is to promote effective psychological practice and enhance public health by applying empirically supported principles of psychological assessment, case formulation, therapeutic relationship, and intervention. (p. 180)

Cournoyer and Powers (2002) stated that, whenever possible, psychological practice should be based on:

Prior findings that demonstrate empirically that certain actions performed with a particular type of client or client system are likely to produce predictable, beneficial and effective results. Every client system should be individually evaluated to determine the extent to which the predicted results have been attained as a direct consequence of the practitioner’s actions. (p. 799)

Experimental methodologies are typical for this approach (White & Kratochwill, 2005).

Besides EBP, psychology and other fields use related terms that are sometimes not clearly distinguished from one another. The American Psychological Association (APA) declared that it is important to clarify the relation between EBPP and empirically supported treatments (ESTs). In the APA’s (2006) view, EBPP is the more comprehensive approach:

ESTs start with a treatment and ask whether it works for a certain disorder or problem under specified circumstances. EBPP starts with the patient and asks what research evidence will assist the psychologist in achieving the best outcome. In addition, ESTs are specific psychological treatments that have proved to be efficacious in controlled clinical trials, whereas EBPP encompasses a broader range of clinical activities (e.g., psychological assessment, case formulation, therapy relationships). As such, EBPP articulates a decision-making process for integrating multiple streams of research evidence into the intervention process. (p. 273)

In a similar way, White and Kratochwill (2005) mentioned concepts related to EBP (or EBPP), such as “empirically validated treatment/interventions” (EVT) and “evidence based intervention (EBI)” (p. 100). The authors stated that the former (treatment validated by experimental research) is used rather seldom and is often replaced by EBI, which “refers to an intervention that meets the criteria of a task force for support on a wide range of methodological and statistical features” (White & Kratochwill, 2005, p. 100).

Shaywitz (2014) provided a simple explanation of the difference between the terms evidence-based and research-based interventions. As she explained, research-based means that there are theories behind the approach, but they have not always been proven. Evidence-based means that there is also evidence of efficacy to back it up. The term EBP is therefore understood more broadly:

It designates the application of a psychological intervention that has previously been documented to have empirical support and be designated as an EBI. EBP involves evaluation of an intervention in practical context in order to determine if the intervention is effective. (White & Kratochwill, 2005, p. 100)

On the one hand, the idea is to use verified and valid theoretical and practical sources (theories, surveys, and guidelines), while on the other, there must be continuous verification or checking that these procedures are effective and beneficial for the client.

The key topic of the present article is the use of EBP in school-psychological practice. School psychologists provide services for various clients (usually students, parents, and teachers) and use various types of interventions in order to achieve different objectives (prevention, investigation, reeducation, psychotherapy, diagnostics, consulting, etc.). In school psychology, the importance of EBI/EBP was first recognized at the turn of the millennium with the creation of the Task Force on Evidence-Based Interventions in School Psychology, supported by the APA (Division 16 – School Psychology) and the Society for the Study of School Psychology. The main task of these actors was to support EBP, for example, by writing a manual for EBI creation and verification (American Psychological Association, 2006; Liu & Oakland, 2016; Shernoff, Bearman, & Kratochwill, 2017).

In recent years, there has been a shift from the clinical model of school psychology to a social model that stresses a healthy and inclusive school climate (Farrell, 2004). A movement towards more comprehensive mental health promotion and intervention in schools is apparent at the global level, and it benefits both schools and mental health systems (Schaeffer et al., 2005).

It is not only school psychologists who can take preventive measures against psychological and mental distress and disadvantage, and can support systemic and organizational change aimed at better health of individuals, families, and communities (APA, 2014). Nonetheless, as Shernoff et al. (2017) stated, school psychologists are uniquely positioned to support the delivery of evidence-based mental health practices in order to address the mental health needs of children and youth. Therefore, their role includes operating as mental health experts within schools and supporting the delivery of comprehensive mental health services across multi-tier systems of support.

To support prevention and a healthy school climate, various programs are created and used in school psychology practice (McKevitt, 2012). Verifying the effectiveness of these programs is one of the key characteristics of EBP. White and Kratochwill (2005) explained that both EBI and EBP include professional decision-making about client care and intervention. They summarized four sources for EBI in practice: (a) research literature on basic intervention as published in professional journals, (b) consensus or expert panel recommendations, (c) reviews of single interventions or programs undertaken by professional groups or other bodies, and (d) literature reviews and synthesis documents.

Besides the integration of the best research findings into psychological practice, attention is given to the validity of psychological testing and assessment, clinical expertise, locating and evaluating research, critical thinking, communication of assessment findings and implications, and so forth. However, the application of EBP in psychology must also take into account the characteristics of clients, their culture, and their preferences. The use of EBP and related guidelines does not mean that client examination and care cease to be individualized and client-targeted. The application of EBP is therefore an individualized and dynamic process (American Psychological Association, 2006; Bornstein, 2017).

When constructing our questionnaire (see the Method section), we relied on the EBI definitions mentioned above. As for EBP sources, we worked with empirical research or school-based empirical research and evaluation, expert consulting and supervision, scientific findings, and practical guidelines. Individual sections of the questionnaire reflect these categories of sources.

Support for EBP Implementation

The use of scientific findings, guidelines, and manuals in practice is certainly useful for bridging the gap between theory and practice, and it plays an important role in the support of early-career professionals (Schaeffer et al., 2005). Nevertheless, their implementation faces diverse challenges. EBP implementation probably has no opponents, strictly speaking, but some authors have identified implementation risks and limitations and have suggested different options for support (White & Kratochwill, 2005). Obstacles in EBP implementation can be divided into individual and organizational ones (Black, Balneaves, Garossino, Puyat, & Qian, 2014), even if the sources of these obstacles cannot always be clearly differentiated.

Individual obstacles usually concern an incorrect understanding of EBP. Some practitioners regard evidence-based interventions and the use of guidelines and manuals as “cookbooks”. Practitioners may oppose the idea of replacing professional assessment and decision-making with instructions and manuals, arguing that the use of EBI ignores the objectives, needs, and values of clients (Cook, Schwartz, & Kaslow, 2017). Other limitations include practitioners’ lack of knowledge and skills to conduct surveys and their lack of awareness of relevant research and guidelines. At the same time, good guidelines are not always available (availability differs by country and is probably best in countries where practitioners are able to read sources in English). The unavailability and, at times, disputed quality of sources can therefore also be considered an obstacle. High-quality, well-elaborated, and copyrighted sources may also be too expensive for psychologists or schools (Schaeffer et al., 2005). Moreover, even the best tools have to be tested and verified in the specific context in which the psychologist works, which is a time-consuming process. Several authors have mentioned the lack of time to implement change, explore, and put new ideas and procedures into practice as another limitation (see Black et al., 2014). Typical organizational obstacles mentioned by practitioners include a rigid organizational culture and a lack of support from colleagues, supervisors, other specialists, and leaders.

In recent years, numerous authors have addressed the identified risks and proposed various options of support for EBP implementation, not only in school psychology. Attention is given to training psychologists for EBP implementation, support from organizational leaders, and changing organizational culture in favor of EBP. In fact, the initiatives and efforts of individuals may be insufficient; successful implementation is hardly possible without external support from both organizations and policymakers (professional associations and communities, political institutions, donors, etc.).

Schaeffer et al. (2005) are convinced that EBP implementation works only if the actors are committed to and confident in EBP; it is therefore important that development proceeds bottom-up and that the organizational culture is taken into account. People must be aware of the objectives and meaning of EBP implementation and should know that they can rely on supportive leaders, whether in terms of finance, training, or feedback. Furthermore, mentoring programs are an effective way to implement EBP, as they provide continuous support to practicing school psychologists (Black et al., 2014).

EBP implementation should focus on the education of practitioners, both in pre-service university programs and in in-service continuous professional development (CPD) courses. One related topic is the scientist-practitioner model of graduate education in psychology (see Black et al., 2014; Hayes, Barlow, & Nelson-Gray, 1999). Different countries pay different attention to EBP and EBI education of school psychologists. Professional literature mainly focuses on training programs in the US, which are supported by professional organizations (e.g., APA Division 16, the Society for the Study of School Psychology, and NASP). In other countries, articles on EBP education for school psychologists are published mainly at the national level, although graduate training in EBP is considered crucial in ensuring that the next generation of practicing school psychologists enters schools with the knowledge, skills, and experience needed to implement effective practices (Shernoff et al., 2017).

It has been noted that transnational research and workplace research must be strengthened in order to create and verify new procedures, and that attention must be given to the methodological aspects of creating and verifying them (Correa-de-Araujo, 2016). The need for research is also mentioned by Kratochwill and Shernoff (2004) in their proposed strategy to promote EBIs for school psychologists. They suggest developing practice-research networks in school psychology and an expanded methodology for evidence-based practices that takes into account the practical context of EBI. They also propose guidelines that school psychology practitioners can use in implementing and evaluating EBIs in practice, in creating professional development opportunities for practitioners, researchers, and trainers, and in forging partnerships with other professional groups involved in the EBI agenda.

Supporting EBP and EBI implementation in specific countries, however, requires an analysis of the current state of both. For this reason, we conducted a survey to determine how school psychologists in the countries involved in this study use sources typical of EBP.

The Present Study

Research on EBP in psychology in education, the topic of this paper, originated within an international group of psychologists in education, the Standing Committee of Psychology in Education of the European Federation of Psychologists’ Associations (EFPA). Discussions about the future of European psychology in education led to the conclusion that the practice of school psychologists in different EU countries should first be clearly understood, and that a plan of appropriate international and national activities to develop and enhance the field should then be established on the basis of these findings.

Meetings within the SC EFPA Psychology in Education prompted us to carry out a survey connecting three Slavic countries: the Czech Republic, Slovakia, and Slovenia. These are relatively small Central European countries with certain similarities in their history, culture, and economic development. The GDP per capita in PPS (Purchasing Power Standards) index is 90 for the Czech Republic, 87 for Slovenia, and 78 for Slovakia (GDP, 2018); total general government expenditure on education is 4.6% of GDP in the Czech Republic, 3.8% in Slovakia (provisional), and 5.4% in Slovenia (total, 2017). The way in which educational psychologists are employed is also very similar; we mainly addressed those who work directly in schools and can therefore be called school psychologists. The Czech Republic and Slovakia even share a common tradition regarding the origins of school psychology. The discipline began to develop only in the post-revolutionary 1990s, when the two countries split, but close cooperation continued and continues to this day. In Slovenia, educational psychology, or school psychology, has a longer tradition. In all three countries, school psychologists work directly in schools and collaborate more or less closely with institutions providing services for schools. In the Czech Republic and Slovakia, school psychologists (who work directly in schools) and consultant psychologists (who work in consultancy centers) are strictly distinguished from each other. In Slovenia, psychologists work both in schools, within school counselling services, and in regionally located counselling centers for schools (Eurydice, 2019; Gregorčič Mrvar & Mažgon, 2017). For this reason, the Slovenian sample is somewhat different, so we use both terms in this article: school psychology and educational psychology.

In the Czech Republic and Slovakia, psychologists in education are typically financed through projects, so positions are often fixed-term, whereas Slovenia has more transparent financing and more stable jobs in this respect.

In the present study, we examined how school psychologists apply EBP. The main aim of the research was to analyze EBP in school psychologists’ practical work. Three research questions were therefore defined: (a) How do school psychologists in the three countries apply EBP in their everyday practice? (b) In which domains of their work do they apply EBP most effectively? (c) What are the perceived obstacles to and needs for applying EBP?

Method

Participants

The participants were 202 psychologists from three European countries: the Czech Republic (41%), Slovakia (32%), and Slovenia (27%). They were recruited by the authors through convenience sampling. Most were female (93%); the most common age group was 31–40 years (36%), and the most common level of practical experience in education was 5 years or less (48%). Table 1 and Table 2 summarize the demographic data by country.

Table 1

Sample description – participants’ sex, age, and years of professional experience

| | Czech (n = 82) | Slovakia (n = 65) | Slovenia (n = 55) |
|---|---|---|---|
| Sex (%) | | | |
| Female | 88.3 | 95.4 | 97.9 |
| Age (%) | | | |
| 25 years or less | 7.8 | 7.7 | 0 |
| 26–30 years | 20.8 | 35.4 | 8.3 |
| 31–40 years | 37.7 | 44.6 | 29.2 |
| 41–50 years | 18.2 | 12.3 | 31.3 |
| 50 years or more | 15.6 | 0 | 31.3 |
| Years of professional experience in education (%) | | | |
| 5 years or less | 53.2 | 72.3 | 8.3 |
| 6–10 years | 35.1 | 23.1 | 18.8 |
| 11–20 years | 7.8 | 4.6 | 31.3 |
| 21 years or more | 3.9 | 0 | 41.7 |

Table 2

Sample description – participants’ workplace

| | Czech (n = 82) | Slovakia (n = 65) | Slovenia (n = 55) |
|---|---|---|---|
| Type of school (%) | | | |
| Elementary school | 69 | 69.2 | 52 |
| Upper secondary school | 15 | 20.0 | 33 |
| Combined (several types of school) | 12 | 10.8 | 2 |
| Other | 4 | 0 | 13 |

Note. Other = educational programs for students with special needs; educational programs for adults; educational centers.

Materials

For the purpose of this study, a new five-part questionnaire (EBP-PiE) for measuring the use of EBP in psychology in education was constructed. It contains 22 items with statements about EBP, which we classified into seven categories on the basis of contemporary empirical and theoretical findings as well as our own expertise.

The first part of the questionnaire was based on White and Kratochwill’s (2005) conclusions referring to (a) research literature, (b) consensus or expert panel recommendations, (c) reviews of single interventions or programs, and (d) literature reviews and synthesis documents. With reference to this concept of EBP, the first part of the questionnaire contains five categories related to the sources school psychologists rely on and the extent to which they apply EBP. These categories are: (1) research findings and literature review (items 1, 8, 15); (2) professional guidelines at the local or national level (items 2, 9, 16); (3) workplace-based empirical research (items 3, 10, 17); (4) cooperation with professionals and peer review (items 4, 11, 18); and (5) evaluation of the efficacy of interventions (items 5, 12, 19). We were interested both in research-based practice, i.e., primary support from the scientific findings of the most recent research (categories 1, 2, and 3), and in evidence-based verification of practice (categories 4 and 5).

The second part of the questionnaire contains items about the availability of these sources (the sixth category, availability of sources, with items 6, 13, 20); it therefore deals with support for EBP use, as discussed above.

We also wanted to identify the work domains in which psychologists apply EBP principles most. Part three of the questionnaire therefore assessed the extent to which psychologists use EBP principles in specific domains (the seventh category, use of EBP principles, with items 7, 14, 21, and 22). These domains were taken from Czech legislation, which defines the work domains of school psychologists as prevention, consulting, reeducation and therapeutic interventions, diagnostic procedures, and methodological support for teachers (Regulation, 2005). Because these domains of school (or educational) psychologists’ work are defined in general terms, they are transferable to other contexts, and we assumed that they would be largely the same in the other two countries.

Responses are given on a 6-point Likert scale (1 = Strongly Disagree, 2 = Disagree, 3 = Slightly Disagree, 4 = Slightly Agree, 5 = Agree, 6 = Strongly Agree), with higher scores indicating stronger endorsement of the statement. Three items were negatively worded (items 15, 16, and 17). Internal consistency of the 22 items was satisfactory in all three samples: α = .86 (Czech Republic), .78 (Slovakia), and .85 (Slovenia).

The fourth part of the questionnaire contains three open-ended questions about perceived limitations and needs in the implementation of EBP.

The last part of the questionnaire consists of a self-report on four demographic variables: age, sex, years of professional experience, and workplace.
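The scoring rules described above can be illustrated with a short sketch. It is not the authors’ procedure (the analyses were run in SPSS) and rests on two assumptions the text does not state: that the negatively worded items 15, 16, and 17 are reverse-scored on the 1–6 scale (as 7 minus the response), and that each category score is the mean of its items. The category labels and the function names `reverse_score`, `category_scores`, and `cronbach_alpha` are hypothetical.

```python
from statistics import mean, pvariance

# Item-to-category mapping as listed in the questionnaire description.
# Category labels are hypothetical shorthand.
CATEGORIES = {
    "literature": [1, 8, 15],
    "guidelines": [2, 9, 16],
    "workplace": [3, 10, 17],
    "cooperation": [4, 11, 18],
    "evaluation": [5, 12, 19],
    "availability": [6, 13, 20],
    "use": [7, 14, 21, 22],
}
NEGATIVE_ITEMS = {15, 16, 17}  # negatively worded items
SCALE_MIN, SCALE_MAX = 1, 6    # 6-point Likert scale


def reverse_score(value: int) -> int:
    """Reverse a 1-6 response (assumption): 1 <-> 6, 2 <-> 5, 3 <-> 4."""
    return SCALE_MIN + SCALE_MAX - value


def category_scores(responses: dict[int, int]) -> dict[str, float]:
    """Mean score per category for one respondent, after reverse-scoring.

    `responses` maps item number (1-22) to the raw response (1-6).
    """
    recoded = {
        item: reverse_score(v) if item in NEGATIVE_ITEMS else v
        for item, v in responses.items()
    }
    return {name: mean([recoded[i] for i in items])
            for name, items in CATEGORIES.items()}


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha; rows = respondents, columns = items.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [pvariance([r[j] for r in rows]) for j in range(k)]
    total_var = pvariance([sum(r) for r in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Because alpha is a ratio of variances, population and sample variances give the same value as long as the same choice is applied to the items and to the total score. For a respondent who answers 4 to every item, for example, the category score for research findings and literature review would be (4 + 4 + 3) / 3 ≈ 3.67 under the reverse-scoring assumption.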

Following Tabachnick and Fidell’s (2007) recommendation on the requirements for exploratory factor analysis (i.e., one large sample collected at a single point in time), we did not perform this procedure on the three small samples of this study. Instead, we used Pearson correlation coefficients to assess construct validity (Table 4 in the Appendix); they indicated positive associations among the seven substantive categories (.29 ≤ r ≤ .64). Most of the correlations were statistically significant, as theoretically expected. In all three subsamples, the correlations between evaluation and use, literature and use, and availability and use were statistically significant and of moderate strength (Hemphill, 2003); in the total sample, r = .55, .51, and .50, respectively (all p ≤ .01). No relationship was found between literature and cooperation or between workplace and cooperation (total sample: r = .07, p = .30, and r = .06, p = .43, respectively). Overall, the results suggest that the categories are substantially related but still meaningfully distinct, because a considerable share of the variance in each category was not explained by its relationships with the other categories.
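The construct-validity check above rests on Pearson correlations between category scores. The sketch below computes r for every pair of categories and the share of variance in one category left unexplained by another (1 − r²), which is one way to read the claim that the categories are related yet distinct: even r = .64 corresponds to r² ≈ .41, leaving about 59% of the variance unexplained. It assumes per-respondent category scores are already available (for instance, as item means); significance tests are not included, and the function names are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation of two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length (n >= 2)")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a variable with zero variance has no correlation")
    return sxy / sqrt(sxx * syy)


def unexplained_share(r: float) -> float:
    """Share of variance in one variable not accounted for by the other."""
    return 1 - r ** 2


def correlation_table(scores: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Pearson r for every pair of categories (hypothetical helper).

    `scores` maps a category name to the list of respondents' category scores,
    with respondents in the same order in every list.
    """
    return {(a, b): pearson_r(scores[a], scores[b])
            for a, b in combinations(scores, 2)}
```

On Python 3.10 or later, `statistics.correlation` computes the same coefficient and could replace `pearson_r`; the explicit version is kept here to show the formula.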

Procedure

Two methods of data collection were used for the EBP-PiE questionnaire. The questionnaire was first prepared in English, the authors’ lingua franca, and was subsequently translated into Slovenian, Czech, and Slovak. Web-based administration was prepared using 1CS, the Slovenian open-source application for online surveys, for Slovenian and Czech respondents, and paper-and-pencil administration was used for Slovak respondents. This decision was based on our experience with surveys in the national contexts and was supported by the assumption that it would not have a significant impact on the results of this study (Brug, Campbell, & van Assema, 1999; Ebert, Huibers, Christensen, & Christensen, 2018).

All data were collected over a 5-week period from April to May 2019. In the Czech Republic, the questionnaires were distributed through the directory of the Association of School Psychology and the directory of the National Institute for Education, which coordinates projects for financing and supporting school consultants. A Facebook page for school psychologists, Školní psychologové, was also used. We received 82 completed questionnaires, corresponding to approximately 16% of school psychologists in the Czech Republic. In Slovakia, the questionnaires were distributed to school psychologists with the help of the Slovak School Psychology Association and the Facebook club of school psychologists. We received 65 completed questionnaires, corresponding to approximately 20% of school psychologists in Slovakia. In Slovenia, the questionnaires were distributed via the mailing list of the Division of Psychologists in Education of the Slovenian Psychologists’ Association. We received 55 completed questionnaires, corresponding to approximately 20% of school psychologists in Slovenia.

Quantitative data were analyzed using IBM SPSS Statistics 20 (IBM Corporation, 2016). Because of the small samples in each country, the analysis was limited to basic descriptive and inferential statistics. The results were supplemented by a qualitative analysis of the answers to the open-ended questions in order to gain insight into the “lived experience” of the respondents (Silverman, 2000; Strauss & Corbin, 1999).
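Table 3 reports skewness and kurtosis alongside means and standard deviations. As a sketch of how such coefficients are commonly computed, the code below implements the bias-adjusted sample skewness (G1) and excess kurtosis (G2). To our understanding these are the formulas reported by SPSS (and by Excel’s SKEW and KURT), but that is an assumption, not a description of the authors’ output. Values near zero on both are consistent with a roughly normal distribution; the function name `describe` is hypothetical.

```python
from statistics import mean, stdev


def describe(values: list[float]) -> dict[str, float]:
    """Mean, sample SD, bias-adjusted skewness (G1), and excess kurtosis (G2)."""
    n = len(values)
    if n < 4:
        raise ValueError("skewness and kurtosis need at least 4 values")
    m = mean(values)
    s = stdev(values)  # sample SD, n - 1 in the denominator
    if s == 0:
        raise ValueError("all values are identical")
    z = [(x - m) / s for x in values]  # standardized scores

    # G1 = n / ((n - 1)(n - 2)) * sum(z^3)
    skew = n / ((n - 1) * (n - 2)) * sum(v ** 3 for v in z)

    # G2 = n(n + 1) / ((n - 1)(n - 2)(n - 3)) * sum(z^4)
    #      - 3(n - 1)^2 / ((n - 2)(n - 3))
    kurt = (n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * sum(v ** 4 for v in z)
            - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))

    return {"M": m, "SD": s, "skewness": skew, "kurtosis": kurt}
```

Applied to one item’s responses within one country, `describe` returns the four quantities shown per row of Table 3; for the evenly spread responses 1, 2, 3, 4, 5 it gives a skewness of 0 and a kurtosis of -1.2 (flatter than normal).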

Results and Discussion

The main focus of the present study was to gain insight into how psychologists in the European context (three different countries) apply EBP in their everyday practice in schools. On the one hand, EBP is considered the highest standard of care (Hamill & Wiener, 2018); on the other, we observed that such information is lacking at the national as well as the international level. This is despite the fact that understanding the quality of psychologists’ professional work in education is crucial for the evaluation, enhancement, and development of psychology in the public interest. The results of this study are preliminary descriptive findings based on data collected from small samples of respondents in three countries. In the future, we expect this research to be developed further and to involve other European national contexts.

EBP in School Psychologists’ Work

Table 3 reports the means and standard deviations of the scores on the EBP-PiE items, as well as the coefficients of skewness and kurtosis.

Table 3

Descriptive statistics for 22 items of the EBP-PiE by country

| Item | Country | M | SD | Skewness | Kurtosis |
|---|---|---|---|---|---|
| 1. My professional decisions and procedures are based on new theories that I have learned about from professional books and journals, and at conferences. | Czech Republic | 4.15 | 1.02 | -.59 | 1.11 |
| | Slovakia | 4.23 | 1.18 | -.41 | -.25 |
| | Slovenia | 4.71 | 0.74 | -.34 | .09 |
| 2. My professional work is based on practical professional guidelines, e.g., assessment tools, intervention steps. | Czech Republic | 4.48 | 0.86 | -.86 | 1.01 |
| | Slovakia | 4.32 | 1.12 | -.88 | .56 |
| | Slovenia | 4.78 | 0.88 | -1.27 | 2.51 |
| 3. My professional work is based on the results of my own workplace research (surveys, interviews, experiments, etc.). | Czech Republic | 4.07 | 1.21 | -.40 | -.55 |
| | Slovakia | 3.63 | 1.29 | -.74 | -.58 |
| | Slovenia | 4.31 | 1.07 | -.75 | -.04 |
| 4. I discuss my individual cases with my colleagues and other experts at my workplace. | Czech Republic | 4.82 | 1.07 | -.50 | -.72 |
| | Slovakia | 4.69 | 1.42 | -1.07 | .38 |
| | Slovenia | 5.20 | 1.01 | -1.55 | 2.40 |
| 5. I regularly evaluate the effectiveness of my practice. | Czech Republic | 4.27 | 1.05 | -.24 | -.32 |
| | Slovakia | 4.14 | 1.37 | -.60 | -.42 |
| | Slovenia | 4.51 | 1.02 | -.52 | .35 |
| 6. In the case of work uncertainty, I get sufficient professional support from colleagues or others. | Czech Republic | 3.99 | 1.31 | -.42 | -.28 |
| | Slovakia | 3.92 | 1.70 | -.33 | -1.18 |
| | Slovenia | 4.65 | 1.04 | -.89 | .61 |
| 7. My preventive work is based on evidence (guidelines, literature, research results). | Czech Republic | 4.18 | 1.00 | -.69 | .27 |
| | Slovakia | 4.14 | 1.07 | -.13 | -.67 |
| | Slovenia | 4.76 | 0.72 | .08 | -.47 |
| 8. My professional decisions and procedures are based on new research results that I have learned about from professional books and journals, and from experts at conferences. | Czech Republic | 4.01 | 1.09 | -.26 | .24 |
| | Slovakia | 3.75 | 1.56 | .01 | -.54 |
| | Slovenia | 4.43 | 1.13 | -.94 | 1.66 |
| 9. My professional work is based on analysis of my previous professional work (evaluation, reflection). | Czech Republic | 4.77 | 0.91 | -.84 | .96 |
| | Slovakia | 4.91 | 0.90 | -.89 | .96 |
| | Slovenia | 5.00 | 0.84 | -.98 | 1.92 |
| 10. My professional work is based on recognized methodology and instruments. | Czech Republic | 4.32 | 1.20 | -.77 | .57 |
| | Slovakia | 3.72 | 1.40 | -.26 | -.67 |
| | Slovenia | 4.85 | 1.04 | -1.02 | 1.11 |
| 11. I consult external experts about my practice and individual cases. | Czech Republic | 4.49 | 1.10 | -.34 | -.45 |
| | Slovakia | 4.52 | 1.32 | -.93 | .29 |
| | Slovenia | 4.85 | 0.80 | .05 | -.97 |
| 12. I use validated evaluation tools to evaluate my own work. | Czech Republic | 3.61 | 1.38 | .07 | -.77 |
| | Slovakia | 3.23 | 1.42 | .22 | -.85 |
| | Slovenia | 3.96 | 1.25 | -.82 | .62 |
| 13. I have sufficient access to new theoretical and empirical findings in psychology. | Czech Republic | 3.84 | 1.17 | -.16 | -.82 |
| | Slovakia | 3.68 | 1.37 | .12 | -1.08 |
| | Slovenia | 4.18 | 1.17 | -.30 | -.60 |
| 14. My interventions are based on validated evidence (guidelines, literature, research results). | Czech Republic | 4.40 | 0.90 | -.69 | .86 |
| | Slovakia | 4.48 | 0.92 | -.12 | -.22 |
| | Slovenia | 4.76 | 0.72 | .72 | .02 |
| 15. My professional decisions and procedures are based on my own intuition and the personal experience that I get from my practice. | Czech Republic | 2.13 | 0.76 | .44 | .11 |
| | Slovakia | 2.08 | 0.96 | 1.50 | 4.14 |
| | Slovenia | 2.24 | 0.94 | .88 | 1.20 |
| 16. My professional work is based on my intuition and experience without special guidelines. | Czech Republic | 3.70 | 1.18 | -.34 | -.41 |
| | Slovakia | 4.06 | 1.44 | -.43 | -.86 |
| | Slovenia | 3.98 | 1.13 | -.20 | -.94 |
| 17. My professional work is not based on documentation and analyses of my interventions. | Czech Republic | 4.08 | 1.27 | -.91 | .37 |
| | Slovakia | 4.20 | 1.51 | -.49 | -.88 |
| | Slovenia | 3.85 | 1.38 | -.08 | -1.05 |
| 18. I look for regular supervision and other forms of reflection about my practice. | Czech Republic | 4.29 | 1.28 | -.25 | -1.05 |
| | Slovakia | 4.68 | 0.97 | -.15 | -.94 |
| | Slovenia | 3.96 | 1.36 | -.07 | -.92 |
| 19. I ask for feedback about my work from pupils, teachers, parents, or others. | Czech Republic | 4.54 | 1.07 | -.60 | .08 |
| | Slovakia | 4.58 | 1.28 | -.76 | -.01 |
| | Slovenia | 4.56 | 1.10 | -.51 | -.18 |
| 20. I have sufficient access to practical professional guidelines and instruments. | Czech Republic | 3.67 | 1.30 | .01 | -.80 |
| | Slovakia | 3.02 | 1.32 | .18 | -.67 |
| | Slovenia | 4.09 | 1.16 | -.33 | -.62 |
| 21. Assessments I do in my school are based on validated evidence (guidelines, literature, research results). | Czech Republic | 4.51 | 1.06 | -.52 | -.15 |
| | Slovakia | 4.46 | 1.09 | -.79 | .92 |
| | Slovenia | 4.64 | 0.90 | -.63 | .36 |
| 22. Methodical support that I provide to teachers in my school is based on validated evidence (guidelines, literature, research results). | Czech Republic | 4.44 | 0.98 | -.59 | .90 |
| | Slovakia | 4.37 | 1.08 | -.56 | .48 |
| | Slovenia | 4.65 | 0.89 | -.41 | .36 |

As can be observed, means ranged from 2.08 to 5.20, with means on the positive items (for example, item 9 on the value of self-evaluation and reflection) higher than means on the negative items (for example, item 15 on the value of personal intuition and experience), in keeping with the literature. Most of the scores were not substantially skewed or kurtotic, which is consistent with approximately normal distributions; the exceptions were item 15 for the Slovak respondents and items 2 and 4 for the Slovenian respondents, whose distributions were markedly skewed and peaked (kurtosis above 2). This is probably due to the extent of the respondents’ professional experience and their workplace conditions (see further discussion, below). Figure 1 shows the items grouped into seven substantial categories.
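For readers who want to screen their own item data in a similar way, the sketch below computes the bias-adjusted sample skewness (G1) and excess kurtosis (G2), the estimators reported by packages such as SPSS. It assumes a list of raw responses to a single item; the function names and the cutoff of 2 used to flag a markedly non-normal item are hypothetical choices for illustration, not a criterion stated in this study.

```python
import math
from statistics import fmean


def skewness_kurtosis(scores):
    """Return (G1, G2): bias-adjusted sample skewness and excess kurtosis."""
    n = len(scores)
    if n < 4:
        raise ValueError("need at least 4 observations")
    mean = fmean(scores)
    dev = [x - mean for x in scores]
    # Central moments with an n denominator.
    m2 = sum(d ** 2 for d in dev) / n
    m3 = sum(d ** 3 for d in dev) / n
    m4 = sum(d ** 4 for d in dev) / n
    if m2 == 0:
        raise ValueError("all responses are identical")
    g1 = m3 / m2 ** 1.5      # moment-based skewness
    g2 = m4 / m2 ** 2 - 3    # moment-based excess kurtosis
    # Small-sample adjustments (G1, G2).
    G1 = math.sqrt(n * (n - 1)) / (n - 2) * g1
    G2 = (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)
    return G1, G2


def is_markedly_nonnormal(scores, cutoff=2.0):
    """Hypothetical screening rule: flag an item if |G1| or |G2| exceeds cutoff."""
    G1, G2 = skewness_kurtosis(scores)
    return abs(G1) > cutoff or abs(G2) > cutoff


# Hypothetical responses to one item (invented for illustration, not study data).
responses = [1, 1, 2, 2, 2, 3, 2, 1, 2, 6]
print(skewness_kurtosis(responses), is_markedly_nonnormal(responses))
```

Any fixed cutoff is a rule of thumb; with subsamples of 55 to 82 respondents, a single extreme answer can noticeably move G2, so flagged items are best inspected rather than automatically excluded.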

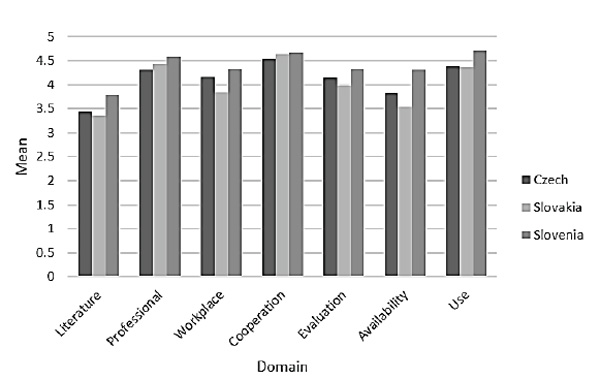

Figure 1. EBP-PiE domains by country

On average, the calculated eta squared values indicate low practical significance of the differences among respondents from the three countries (0.005 ≤ η² ≤ 0.08; Lakens, 2013). Respondents reported the highest values for the reliance of their work on professional cooperation (M_total = 4.60; η² = 0.005), which means that they repeatedly reflect on their practice and consult with other professionals on various professional issues. The second most highly represented category was the use of EBP principles (M_total = 4.46; η² = 0.04), which reflects the relatively consistent everyday use of validated evidence, such as guidelines, literature, and research results, in psychological assessment, prevention, and intervention, as well as in the methodological support provided to teachers. The use of professional guidelines based on respondents’ own professional experience appears to be almost equally important (M_total = 4.42; η² = 0.03). In contrast, professional literature that follows new theoretical and empirical findings as a source of EBP, and the availability of literature, professional findings, and professional support, were represented by relatively low values and showed differences of medium practical significance between countries, contrary to our initial expectation (literature: M_total = 3.50, η² = 0.06; availability: M_total = 3.87, η² = 0.08). Figure 1 shows that Slovenian school psychologists reported the highest average values in both categories and Slovak psychologists the lowest; this could be connected with our hypothesis about country context and extent of professional experience (see the description of the sample in the Method section).

Nevertheless, the categories of workplace-based empirical research and evaluation of the effectiveness of interventions show mid-range values in all three subsamples (workplace research: M_total = 4.11, η² = 0.06; evaluation: M_total = 4.14, η² = 0.02). This result contradicts Hamill and Wiener’s (2018) finding that psychologists with more years of practice had more negative attitudes towards EBP than their younger colleagues. Those authors did emphasize, however, that the empirical results of different studies are still inconclusive and that further research is needed to confirm the impact of individual differences, such as years of experience, on attitudes towards EBP and on the implementation of EBP in (school) psychologists’ practice.
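The eta squared values above compare the three country subsamples. As a minimal sketch, assuming a one-way ANOVA with country as the only factor (the exact procedure is not spelled out here), η² = SS_between / SS_total can be recovered from each subsample’s mean, standard deviation, and size. The `GroupSummary` type and the function names are hypothetical; the sizes 82, 65, and 55 assume that every respondent answered the item; and the small/medium/large labels use Cohen’s conventional benchmarks (.01, .06, .14).

```python
from dataclasses import dataclass


@dataclass
class GroupSummary:
    """Summary statistics for one country subsample on one item or category."""
    n: int       # number of respondents
    mean: float  # subsample mean
    sd: float    # sample standard deviation (n - 1 denominator)


def eta_squared(groups):
    """One-way ANOVA eta squared, SS_between / SS_total, from group summaries."""
    total_n = sum(g.n for g in groups)
    grand_mean = sum(g.n * g.mean for g in groups) / total_n
    # Between-group sum of squares: spread of subsample means around the grand mean.
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares, recovered from each subsample's variance.
    ss_within = sum((g.n - 1) * g.sd ** 2 for g in groups)
    ss_total = ss_between + ss_within
    if ss_total == 0:
        raise ValueError("no variance in the data")
    return ss_between / ss_total


def benchmark_label(eta2):
    """Label an eta squared value with Cohen's conventional cutoffs (.01, .06, .14)."""
    if eta2 >= 0.14:
        return "large"
    if eta2 >= 0.06:
        return "medium"
    if eta2 >= 0.01:
        return "small"
    return "negligible"


# Item 20 of Table 3, assuming every respondent answered it (n = 82, 65, 55).
item20 = [
    GroupSummary(n=82, mean=3.67, sd=1.30),  # Czech Republic
    GroupSummary(n=65, mean=3.02, sd=1.32),  # Slovakia
    GroupSummary(n=55, mean=4.09, sd=1.16),  # Slovenia
]
eta2 = eta_squared(item20)
print(f"eta squared = {eta2:.3f} ({benchmark_label(eta2)})")
```

The category values reported in the text are based on grouped items, so this item-level figure is only an illustration of the arithmetic and is not one of the values the study reports.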

Barriers and Needs Perceived in EBP Application

Answers to the open questions in the last part of the questionnaire did not differ greatly among countries; rather, they complemented each other. We categorized the answers according to similarity in meaning using the open coding technique (Blair, 2015; Strauss & Corbin, 1998). The qualitative analysis resulted in eight categories of expressed obstacles and needs:

Time: It is most important for school psychologists to work with clients, so they very often have negative comments about the administrative workload. In recent years, this has mainly been the documentation of pupils with special educational needs (particularly typical for the Czech Republic, where a new system of supportive measures for these pupils was implemented a short time ago), GDPR documents, project applications, and so on. In the Czech Republic and Slovakia, school psychologists often work part-time and at multiple schools. This limits their capacity to work with varied theories and surveys, because they mainly want to devote their time in the school, short as it is, to their clients.

Work overload: Respondents in all three countries referred to the workload that the nature of their profession generates. Their job is diverse and dynamic, and they feel they play the role of “servants” or even “supermen”. They feel they do not have enough time and energy for EBP application.

Support and appreciation: School psychologists reported that they often lack feedback and, in particular, appreciation from parents or colleagues. They would also welcome appreciation from school leaders and consultants in the schools. In this respect, there is a certain difference between school psychologists, who work in schools only, and educational psychologists, who spend a considerable portion of their time (if not all of it) in regional advisory centers for schools. This system of support for schools is similar in all three countries, but no data are available on how many of our respondents work in schools only and how many also cooperate closely with regional advisory centers.

Education and continuing professional development: School psychologists reported that they lack a system of accessible, high-quality in-service education, practice-oriented conferences, seminars, and local networks. They think that undergraduate education is too general. Czech psychologists appreciated the social networks of school psychologists but said they lacked specialized training programs in school psychology, as only general bachelor’s and master’s programs in psychology are available.

Material and information: The respondents would appreciate high-quality and dynamic websites, comprehensive methods and guidelines, high-quality books, nationwide research or data from foreign research applied in various cultures, examples of good practice and models, professional journals, inexpensive tools, and other resources. Such requirements appeared in the questionnaires from all three countries. These countries are small, few high-quality resources are available in their national languages, and the psychologists are not always able or willing to study sources written in English.

Supervision: School psychologists regret the lack of methodological support and mentoring (not only for early-career psychologists); they would welcome periodic or even regular supervision.

Collaboration: School psychologists are often the only specialists educated in their field and they sometimes feel that other people in the schools do not understand them. They would appreciate a more collaborative relationship with teachers, school psychologists in other schools and advisory centers, doctors, clinical psychologists, academics, etc. Certainly, this situation is different for those who collaborate with regional advisory centers, either part-time or in close cooperation.

Financial support: School psychologists lack financial resources for diagnostic testing, training, books, and other materials. Furthermore, financing of school psychologists in the Czech Republic and Slovakia is not transparent; many of them are paid from European projects, while schools decline to pay any additional costs for psychologists’ education, literature, and materials from their budgets.

To conclude, it seems that the participating countries, especially the Czech Republic and Slovakia, lack both a system for employing and financing school psychologists and a system for their education and support. During the analysis, we encountered some differences in the values and answers of the three subsamples, which we assume are primarily due to the age of the respondents (Slovenians were on average the oldest group and Slovaks the youngest) or to their years of experience practicing professional psychology in schools (41.7% of Slovenian respondents reported 21 or more years of professional experience, whereas the largest groups of Czech and Slovak respondents reported about 5 years of professional experience or less [53.2% and 72.3%, respectively]). These differences in age and experience suggest the longer tradition and greater stability of the system in Slovenia and a certain resemblance between Czech and Slovak developments in school psychology.

Hamill and Wiener (2018) speculated that individual differences such as years of practice, training in EBP, national setting of professional practice, or other variables might influence psychologists’ attitudes towards EBP, just as in other health professions. We assume that the differences can be further explained by the different systems of employing school psychologists. In the Czech Republic, for example, school psychologists often hold part-time or short-term contracts (they are often paid from project budgets) and work in two schools at the same time, whereas in Slovenia they are employed full-time as counsellors in the counselling service of a particular educational institution, either a preschool or a school. Moreover, the work of school psychologists involves a great deal of “multi-tasking”, and in answers to open questions, respondents often cited a “lack of time” for applying EBP. In addition, their professional roles may vary significantly from school to school, from region to region, and between national and educational contexts (Hamill & Wiener, 2018; Hosp & Reschly, 2002).

Finally, it is important to highlight the fact that school psychologists from different countries may have limited access to research, guidelines, or theories due to language barriers and lack of national sources. Respondents from relatively small European countries participated in the study. Not all of them are able to read professional literature in English. In answers to open questions, they often mentioned the lack of national research and guidelines, and pointed out that not all research data and guidelines are applicable to their practice. This supports the notion that school psychology is in general a relatively new discipline (D’Amato, Zafiris, McConnell, & Dean, 2011; DuPaul, 2011).

When answering open questions, some respondents pointed out that EBP was not a major topic for them. They felt that the personality of the psychologist, his/her intuition, and the support and leadership/mentoring available are more important. They also often emphasized the gap between theory and practice. They reported that they frequently do not understand the results of a particular study and/or that some of these results are not applicable in practice (Kehle & Bray, 2005).

Conclusion

Intuition and individualized interventions are important aspects of working with individual clients or groups in all helping professions and will always remain irreplaceable in school psychology practice. Nevertheless, grounding practice in scientific and practical evidence is a major challenge. It is necessary to study EBP in school psychology in various countries in order to create, translate, adapt, and verify new findings and practical guidelines. Connections between academic and practice platforms can help in this respect. University researchers and psychology students can support practitioners in looking for, applying, and developing relevant methodologies for evaluating the effectiveness of procedures. Therefore, the aim is not merely to enhance methodology and EBP development, but also to create opportunities for the exchange of experience, individual and collective mentoring, and the development of appropriate attitudes towards EBP (Hamill & Wiener, 2018). Kratochwill and Shernoff (2004) emphasize the need for researchers, trainers, and practitioners to share responsibility for a challenge as demanding as EBP development in school psychology. The Standing Committee Psychology in Education at EFPA has the potential to connect European academics and practitioners in school psychology and, recognizing the important role of cross-cultural research, has already begun to facilitate the exchange of experience.

The results of the present research also show the importance of stable school systems for the use of EBP principles. It is important for school psychologists to be employed full-time, with long-term contracts and transparent financing, and to be relieved to some extent of their workload (for example, administrative tasks) while being given time for professional development (days off for study, supervision, meetings, and so on). If psychologists work under permanent stress and uncertainty, they lack the time and willingness to seek out and study professional literature and to create and verify new procedures.

Limitations

We are aware of the limitations of this research. First, the samples were relatively small, although they represent a reasonable percentage of school psychologists in the countries considered, because the research was carried out in relatively small countries with rather low numbers of school/educational psychologists. A second limitation is the translation of the questionnaire into three languages, which may fail to capture all the nuances of the items, despite our cooperation with qualified translators. Third, the results indicating the use of evidence in practice do not address the validity and quality of the theories, methodologies, guidelines, and other tools applied. We also have to consider what features are common to all our respondents who were willing to complete the questionnaire and reflect on their work. Although we do not wish to express distrust, it is necessary to take into account the respondents’ attitudes to completing a questionnaire: because the use of evidence in practice is a positive feature of the profession, social desirability may have partly biased the results and made them look more positive than reality. Finally, it should be noted that the respondents were predominantly women, which skewed the gender balance and possibly influenced the results (for a review, see Hamill & Wiener, 2018).

To conclude, this study shows that the school psychologists who participated are aware of EBP in their psychological work at educational institutions and apply it to a certain degree in their everyday practice. The results suggest that they are effective in (1) consulting and discussing their practice with other professionals (cooperation category), (2) using validated evidence in preventive, consultative, and other types of interventions, in diagnostics, and in supporting teachers (use category), and (3) following professional guidelines based on their own professional experience (professional category). The results also imply that many respondents do not keep up with contemporary theoretical and empirical findings from the literature (literature category) and report weak access to the resources that support the professional activities mentioned above (availability category). Finally, the qualitative analysis shows that the respondents face various barriers to meeting their professional needs, including EBP implementation, among them lack of time, resources, and financing, and lack of social/professional support and collaboration. The respondents cope with an excessive workload due to the complexity of their everyday practice.

Nevertheless, we are very positive about these findings, even though they are based on preliminary descriptive results. In the coming year, we plan to discuss the findings with the respondents and other colleagues, addressing key professional problems both within national contexts and transnationally. Equally important in this respect will be the development of appropriate attitudes of school psychologists towards EBP, because research has established a positive association of attitudes towards EBP with engagement in EBP and with training in its correct implementation (Hamill & Wiener, 2018). Moreover, we intend to develop specific professional guidelines for the systematic application of EBPP in preschool institutions and schools, and to develop and enrich the conceptualization and methodology of the present research by inviting colleagues from different European countries to collaborate. We plan to further develop our research and to contribute to the quality of the EBP of school psychologists in Europe and beyond.

References

American Psychological Association (APA), Presidential Task Force on Evidence-Based Practice. (2006). Evidence-based practice in psychology. American Psychologist, 61(4), 271–285. https://doi.org/10.1037/0003-066X.61.4.271

APA (2014). Guidelines for prevention in psychology – American Psychological Association. American Psychologist, 69(3), 285–296. https://doi.org/10.1037/a0034569

Bennett, S., & Bennett, J. W. (2000). The process of evidence-based practice in occupational therapy: Informing clinical decisions. Australian Occupational Therapy Journal, 47, 171–180. https://doi.org/10.1046/j.1440-1630.2000.00237.x

Black, A.T., Balneaves, L.G., Garossino, C., Puyat, J.H., & Qian, H. (2014). Promoting evidence-based practice through a research training program for point-of-care clinicians. The Journal of Nursing Administration, 45(1), 14–20. https://doi.org/10.1097/NNA.0000000000000151

Blair, E. (2015). A reflexive exploration of two qualitative data coding techniques. Journal of Methods and Measurement in the Social Sciences, 6(1), 14–29. https://doi.org/10.2458/v6i1.18772

Bornstein, R.F. (2017). Evidence-based psychological assessment. Journal of Personality Assessment, 99(4), 435–445. https://doi.org/10.1080/00223891.2016.1236343

Briner, R. (2000). Evidence-based human resource management. In L. Trinder & S. Reynolds (Eds.), Evidence-based practice: A critical appraisal (pp. 184–211). Oxford, UK: Blackwell Science. https://doi.org/10.1002/9780470699003.ch9

Brug, J., Campbell, M., & van Assema, P. (1999). The application and impact of computer-generated personalized nutrition education: A review of the literature. Patient Education and Counseling, 36(2), 145–156. https://doi.org/10.1016/S0738-3991(98)00131-1

Cook, S.C., Schwartz, A.C., & Kaslow, N.J. (2017). Evidence-based psychotherapy: Advantages and challenges. Neurotherapeutics, 14(3), 537–545. https://doi.org/10.1007/s13311-017-0549-4

Cochrane, A.L. (1972). Effectiveness and efficiency: Random reflections on health services. London, UK: Nuffield Provincial Hospitals Trust.

Correa-de-Araujo, R. (2016). Evidence-based practice in the United States: Challenges, progress, and future directions. Health Care Women Int., 37(1), 2–22. https://doi.org/10.1080/07399332.2015.1102269

Cournoyer, B., & Powers, G. (2002). Evidence-based social work: The quiet revolution continues. In A. R. Roberts & G. Greene (Eds.), Social workers’ desk reference (pp. 798–807). New York, NY: Oxford University Press.

D’Amato, R.C., Zafiris, C., McConnell, E., & Dean, R.S. (2011). The history of school psychology: Understanding the past to not repeat it. In M. A. Bray & T. J. Kehle (Eds.), The Oxford handbook of school psychology (pp. 9–46). New York, NY: Oxford University Press. https://doi.org/10.1093/oxfordhb/9780195369809.013.0015

DuPaul, G.J. (2011). School psychology as a research science: Are we headed in the right direction? Journal of School Psychology, 49, 739–744. https://doi.org/10.1016/j.jsp.2011.11.001

Ebert, J.F., Huibers, L., Christensen, B., & Christensen, M.B. (2018). Paper- or web-based questionnaire invitations as a method for data collection: Cross-sectional comparative study of differences in response rate, completeness of data, and financial cost. Journal of Medical Internet Research, 20(1). https://doi.org/10.2196/jmir.8353

Eurydice (2019, March 27). Slovenia: Guidance and counselling in early childhood and school education. Retrieved from https://eacea.ec.europa.eu/national-policies/eurydice/content/guidance-and-counselling-early-childho...

European school psychologists improve lifelong learning – ESPIL (2010). Education, training, professional profile and service of psychologists in the European educational system. Brussels, Belgium: European Federation of Psychologists Associations (EFPA).

Farrell, P. (2004). School psychologists: Making inclusion a reality for all. School Psychology International, 25(1), 5–19. https://doi.org/10.1177/0143034304041500

Geddes, J. (2000). Evidence-based practice in mental health. In L. Trinder & S. Reynolds (Eds.), Evidence-based practice: A critical appraisal (pp. 66–88). Oxford, UK: Blackwell Science. https://doi.org/10.1002/9780470699003.ch4

GDP per capita in PPS (2018). Eurostat. Retrieved from https://ec.europa.eu/eurostat/tgm/table.do?tab=table&plugin=1&language=en&pcode=tec00114

Gregorčič Mrvar, P., & Mažgon, J. (2017). The role of the school counsellor in school–community collaboration: The case of Slovenia. International Journal of Cognitive Research in Science, Engineering and Education, 5(1), 19–29. https://doi.org/10.5937/IJCRSEE1701019G

Hamill, N.R., & Wiener, K.K.K. (2018). Attitudes of psychologists in Australia towards evidence-based practice in psychology. Australian Psychologist, 53, 477–485. https://doi.org/10.1111/ap.12342

Hayes, S.C., Barlow, D.H., & Nelson-Gray, R.O. (1999). The scientist practitioner: Research and accountability in the age of managed care (2nd ed.). Needham Heights, MA: Allyn & Bacon.

Hemphill, J.F. (2003). Interpreting the magnitudes of correlation coefficients. American Psychologist, 58(1), 78–80. https://doi.org/10.1037/0003-066X.58.1.78

Hoagwood, K., & Johnson, J. (2003). School psychology: A public health framework I. From evidence-based practices to evidence-based policies. Journal of School Psychology, 41(1), 3–21. https://doi.org/10.1016/S0022-4405(02)00141-3

Hosp, J.L., & Reschly, D.J. (2002). Regional differences in school psychology practice. School Psychology Review, 31(1), 11–29.

Institute of Medicine. (2001). Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press.

Kehle, T.J., & Bray, M.A. (2005). Reducing the gap between research and practice in school psychology. Psychology in the Schools, 42(5), 577–584. https://doi.org/10.1002/pits.20093

Kratochwill, T., & Shernoff, E. (2004). Evidence-based practice: Promoting evidence-based interventions in school psychology. School Psychology Review, 33(1), 34–48.

Lakens, D. (2013). Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t-tests and ANOVAs. Frontiers in Psychology, 4, 863. https://doi.org/10.3389/fpsyg.2013.00863

Lilienfeld, S.O., Ammirati, R., & David, M. (2012). Distinguishing science from pseudoscience in school psychology: Science and scientific thinking as safeguards against human error. Journal of School Psychology, 50(1), 7–36. https://doi.org/10.1016/j.jsp.2011.09.006

Liu, S., & Oakland, T. (2016). The emergence and evolution of school psychology literature: A scientometric analysis from 1907 through 2014. School Psychology Quarterly, 31, 104–121. https://doi.org/10.1037/spq0000141

Maher, C.G., Sherrington, C., Elkins, M., Herbert, R.D., & Moseley, A.M. (2004). Challenges for evidence-based physical therapy: Accessing and interpreting high-quality evidence on therapy. Physical Therapy, 87(7), 644–654.

Mason, D. J., Leavitt, J. K., & Chaffee, M. W. (Eds.). (2002). Policy & Politics in Nursing and Health Care. St Louis, MO: Saunders.

McKevitt, B. C. (2012). School psychologists’ knowledge and use of evidence-based, social-emotional learning interventions. Contemporary School Psychology, 16(1), 33–45.

Okpych, N., & Yu, J. L.-H. (2014). A historical analysis of evidence-based practice in social work: The unfinished journey toward an empirically grounded profession. Social Service Review, 88, 3–58. https://doi.org/10.1086/674969

Patterson, D.S., Dulmus, C.N., & Maguin, E. (2012). Empirically supported treatment's impact on organizational culture and climate. Research on Social Work Practice, 22(6), 665–671. https://doi.org/10.1177/1049731512448934

Regulation (2005). Regulation 72/2005 on the provision of consultancy in schools and school consulting establishments. [Vyhláška 72/2005 Sb o poskytování poradenských služeb ve školách a školských poradenských zařízeních]. MŠMT.

Sackett, D.L., Rosenberg, W.M., Gray, J.A., Haynes, R.B., & Richardson, W.S. (1996). Evidence based medicine: What it is and what it isn't. British Medical Journal, 312, 71–72. https://doi.org/10.1136/bmj.312.7023.71

Silverman, D. (2000). Doing qualitative research: A practical handbook. London, UK: Sage.

Schaeffer, C.M., Bruns, E., Weist, M., Stephan, S.H., Goldstein, J., & Simpson, Y. (2005). Overcoming challenges to using evidence-based interventions in schools. Journal of Youth and Adolescence, 34(1), 15–22. https://doi.org/10.1007/s10964-005-1332-0

Shaywitz, S. (The Yale Center for Dyslexia & Creativity) (2014, September 18). Evidence-based vs research based programs for dyslexics [Video file]. Retrieved from https://www.youtube.com/watch?v=nbQ9wAtTxlU

Shernoff, E.S., Bearman, S.K., & Kratochwill, R.T. (2017). Training the next generation of school psychologists to deliver evidence-based mental health practices: Current challenges and future directions. School Psychology Review, 46(2), 219–232. https://doi.org/10.17105/SPR-2015-0118.V46.2

Strauss, A., & Corbin, J. (1998). Basics of qualitative research (2nd ed.). Thousand Oaks, CA: Sage.

Tabachnick, B.G., & Fidell, L.S. (2007). Using multivariate statistics (5th ed.). Boston, MA: Allyn & Bacon.

Thomas, G., & Pring, R. (Eds.). (2004). Evidence-based practice in education. Maidenhead, UK: Open University Press.

Total general government expenditure on education, 2017 (2017). Eurostat. Retrieved from https://ec.europa.eu/eurostat/statistics-explained/images/6/66/Total_general_government_expenditure_...

White, J.J., & Kratochwill, T.R. (2005). Practice guidelines in school psychology: Issues and directions for evidence-based interventions in practice and training. Journal of School Psychology, 43, 99–115. https://doi.org/10.1016/j.jsp.2005.01.001

Notes

1. For the purpose of this paper the term “school psychologist” is defined as follows: “The psychologist in the educational system is a professional psychologist with a Master’s degree in psychology and expertise in the field of education.” (European school psychologists…, 2010, p. 8).

To cite this article: Juriševič, M., Lazarová, B., & Gajdošová, E. (2019). Evidence-Based Practice for Psychologists in Education: A Comparative Study from the Czech Republic, Slovakia, and Slovenia. Psychology in Russia: State of the Art, 12(4), 79–100.

The journal content is licensed with CC BY-NC “Attribution-NonCommercial” Creative Commons license.