Gaze-and-brain-controlled interfaces for human-computer and human-robot interaction

Abstract

Background. Human-machine interaction technology has greatly evolved during the last decades, but manual and speech modalities remain single output channels with their typical constraints imposed by the motor system’s information transfer limits. Will brain-computer interfaces (BCIs) and gaze-based control be able to convey human commands or even intentions to machines in the near future? We provide an overview of basic approaches in this new area of applied cognitive research.

Objective. We test the hypothesis that the use of communication paradigms and a combination of eye tracking with unobtrusive forms of registering brain activity can improve human-machine interaction.

Methods and Results. Three groups of ongoing experiments at the Kurchatov Institute are reported. First, we discuss the communicative nature of human-robot interaction, and approaches to building a more efficient technology. Specifically, “communicative” patterns of interaction can be based on joint attention paradigms from developmental psychology, including a mutual “eye-to-eye” exchange of looks between human and robot. Further, we provide an example of “eye mouse” superiority over the computer mouse, here in emulating the task of selecting a moving robot from a swarm. Finally, we demonstrate a passive, noninvasive BCI that uses EEG correlates of expectation. This may become an important filter to separate intentional gaze dwells from non-intentional ones.

Conclusion. The current noninvasive BCIs are not well suited for human-robot interaction, and their performance, when they are employed by healthy users, is critically dependent on the impact of the gaze on selection of spatial locations. The new approaches discussed show a high potential for creating alternative output pathways for the human brain. When support from passive BCIs becomes mature, the hybrid technology of the eye-brain-computer interface (EBCI) will have a chance to enable natural, fluent, and effortless interaction with machines in various fields of application.

Received: 16.05.2017

Accepted: 11.06.2017

Themes: Cognitive psychology

PDF: http://psychologyinrussia.com/volumes/pdf/2017_3/psych_3_2017_9.pdf

Pages: 120-137

DOI: 10.11621/pir.2017.0308

Keywords: attention, eye-to-eye contact, eye movements, brain-computer interface (BCI), eye-brain-computer interface (EBCI), electroencephalography (EEG), expectancy wave (E-wave), human-robot interaction, brain output pathways

Introduction

No matter how rich our inner world, the intention to interact with objects in the external world or to communicate with others has to be implemented through the activity of peripheral nerves and muscles. Thus, motor system disorders, such as Amyotrophic Lateral Sclerosis (ALS) or a number of other diseases and traumas, seriously impair interaction with the external world, up to its full disruption in so-called Completely Locked-In Syndrome (CLIS). Of course, these clinical conditions are extremely rare. However, the problem of tight biological constraints on the motor output system frequently arises in the working life of many professionals, such as surgeons during an endoscopic operation or military servicemen on a battlefield: when both hands are busy with instruments, one suddenly wishes to possess something like a “third hand”. Because of constraints imposed by the motor system’s information transfer limits, the dependence of interaction on the motor system can be considered as a significant limitation in systems that combine natural human intellect and machine intelligence. This is akin to the philosophical stance of Henri Bergson (1907/2006), who emphasized the opposition of mental and physical efforts. Up to the present day, the effects of physical workload on mental activity have been little studied (DiDomenico, & Nussbaum, 2011). Nevertheless, we suspect that the physical load required for interaction with technologies can interfere with mental work, at least with kinds of mental work that require the highest concentration and creativity.

In this review, we consider basic elements of a technology that largely bypass the motor system and can be used for interaction with technical devices and people, either instead of normal mechanical or voice-based communication or in addition to it. More than two decades ago, one of us anticipated such aspects of the current development as the role of communication pragmatics for human-robot interaction (Velichkovsky, 1994) and the combination of eye tracking with brain imaging (Velichkovsky & Hansen, 1996). This direction is exactly where our work at the Kurchatov Institute has been moving in recent years. In this review, we will focus primarily on our own studies, but discuss them in a more general context of contributions to the field by many researchers around the world. In particular, we will address requirements that are crucial for interaction with dynamic agents and not just with static devices. Even with simple geometric figures, motion dramatically enhances the observer’s tendency to attribute intentionality (e.g., Heider & Simmel, 1944). This makes communication paradigms especially important in the development of human-robot interaction systems.

Basic issues and approaches

Brain-Computer Interfaces (BCIs)

Many attempts have been made to bypass the usual pathway from the brain to a machine, based on manual activation of a keyboard, a mouse, a touchscreen, or any other type of mechanically controlled device. The most noticeable area of technology that has emerged as the result of such attempts is the Brain-Computer Interface (BCI), systems that offer fundamentally new output pathways for the brain (Brunner et al., 2015; Lebedev, & Nicolelis, 2017; Wolpaw, Birbaumer, McFarland, Pfurtscheller, & Vaughan, 2002). Among BCI studies, the most impressive achievements were based on the use of intracortically recorded brain signals, such as the simultaneous control by a patient of up to 10 degrees of freedom in a prosthetic arm (Collinger et al., 2013; Wodlinger et al., 2014). However, invasive BCIs are associated with high risks. The technology is not yet ready to be accepted even by severely paralyzed patients (Bowsher et al., 2016; Lahr et al., 2015; Waldert, 2016). This will possibly not change for years or even decades to come.

Noninvasive BCIs are based mainly on the brain’s electric potentials recorded from the scalp, i.e., the electroencephalogram (EEG). Magnetoencephalography (MEG) might offer a certain improvement over the EEG, but it is currently not practical because the equipment is bulky and very expensive. Similar difficulties arise with functional Magnetic Resonance Imaging (fMRI), which is too slow for communication and control of machines by healthy people; Near Infra-Red Spectroscopy (NIRS) is also too slow. Low speed and accuracy are currently associated with all types of noninvasive BCIs, and the information transfer rate of even the fastest noninvasive BCIs is, unfortunately, much below the levels typical for the use of mechanical devices for computer control. Even a record typing speed for a non-invasive BCI, more than twice as fast as the previous record, still was only one character per second (Chen, Wang, Nakanishi, Gao, Jung, & Gao, 2015).

Non-invasive BCIs and eye movements

Even more important than the low speed and accuracy of the most effective non-invasive BCIs is the fact that any substantial progress in performance has so far been associated only with BCIs that use visual stimuli. In such BCIs, the user is typically presented with stimuli repeatedly flashed on a screen at different positions associated with different commands or characters. In the BCI based on the P300 wave of event-related potentials (ERPs), flashes are presented at irregular intervals at time moments that differ for all positions or for different groups of positions. For example, if the BCI is used for typing, a matrix with flashing letters can be used. To type a letter, a user has to focus on it and notice its flashes; ERPs to stimuli presented at different positions are compared, and the strongest response indicates the attended position. Many modifications to the P300 BCI have been proposed (Kaplan, Shishkin, Ganin, Basyul, & Zhigalov, 2013), whereby mostly aperiodic visual stimuli at different positions were used. Many P300 BCI studies involve healthy participants who automatically orient not only their focus of attention (a prerequisite for P300) but also their gaze toward the target. If such a participant is instructed to voluntarily refrain from looking at the stimulus (Treder, & Blankertz, 2010), or if a P300 BCI is used by a patient with impaired gaze control (Sellers, Vaughan, & Wolpaw, 2010), or if nonvisual modalities are used (Rutkowski, & Mori, 2015; Rutkowski, 2016), performance drops dramatically compared to the use of the P300 BCI when it is possible to foveate visual stimuli.
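The comparison of ERPs across positions can be illustrated with a minimal sketch. It is not the algorithm of any particular P300 BCI: the function names, the 250–500 ms scoring window, and the use of the mean amplitude as the "strength" of a response are all assumptions made for illustration, and practical systems typically rely on trained classifiers rather than a raw amplitude comparison.

```python
from statistics import mean


def average_epochs(epochs):
    """Average equal-length single-trial epochs sample by sample (the ERP)."""
    return [mean(samples) for samples in zip(*epochs)]


def p300_score(erp, sampling_rate_hz, window_s=(0.25, 0.5)):
    """Mean ERP amplitude inside an assumed P300 window after the flash."""
    start = int(window_s[0] * sampling_rate_hz)
    stop = int(window_s[1] * sampling_rate_hz)
    return mean(erp[start:stop])


def select_position(epochs_by_position, sampling_rate_hz):
    """Return the position whose averaged response is strongest.

    epochs_by_position maps a position label to a list of epochs,
    each epoch being a list of EEG samples time-locked to a flash.
    """
    scores = {
        position: p300_score(average_epochs(epochs), sampling_rate_hz)
        for position, epochs in epochs_by_position.items()
    }
    return max(scores, key=scores.get)
```

Averaging over repeated flashes is what makes the comparison feasible at all, because a single-trial P300 is usually buried in ongoing EEG activity; this is also one reason why such BCIs are slow.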

Another BCI technology is called the Steady-State Visual Evoked Potential BCI (SSVEP BCI; Gao, Wang, Gao, & Hong, 2014; Chen et al., 2015). It has relatively high accuracy and speed and employs periodic stimuli with different frequencies, which can in turn be found in the EEG response that these stimuli evoke. Other effective BCIs include the visual-ERP-based BCI developed in the mid-1970s (Vidal, 1973), the pseudorandom code-modulated Visual Evoked Potential BCI that uses complex temporal patterns of visual stimulation (cVEP BCI; Bin et al., 2011; Aminaka, Makino, & Rutkowski, 2015), and our “single-stimulus” BCI for rapidly sending a single command from only one position where a visual stimulus is presented aperiodically (Shishkin et al., 2013; Fedorova et al., 2014). In all these BCIs, responses of the visual cortex to visual stimuli are used explicitly. Although attention modulates these responses, and can be used separately from gaze (as in SSVEP BCI; see Lesenfants et al., 2014), the performance of these BCIs again drops greatly if foveating is prevented.
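The principle behind SSVEP detection, finding the stimulation frequency in the EEG response, can be sketched as follows. This is a simplified illustration under stated assumptions: the function names are hypothetical, a single EEG channel is used, and the power at each candidate frequency is estimated by projecting the signal onto a sine and a cosine of that frequency. Published SSVEP BCIs generally use more elaborate multichannel methods.

```python
import math


def power_at(signal, freq_hz, sampling_rate_hz):
    """Estimate signal power at one frequency (a single-bin Fourier projection)."""
    n = len(signal)
    re = sum(
        x * math.cos(2 * math.pi * freq_hz * i / sampling_rate_hz)
        for i, x in enumerate(signal)
    )
    im = sum(
        x * math.sin(2 * math.pi * freq_hz * i / sampling_rate_hz)
        for i, x in enumerate(signal)
    )
    return (re * re + im * im) / (n * n)


def detect_ssvep(signal, candidate_freqs_hz, sampling_rate_hz):
    """Return the candidate stimulation frequency with the highest power."""
    return max(
        candidate_freqs_hz,
        key=lambda f: power_at(signal, f, sampling_rate_hz),
    )
```

Each candidate frequency corresponds to one flickering stimulus, so the detected frequency identifies the stimulus the user is attending to (and, in most cases, looking at).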

Enhancement of control by using eye movements contradicts the strict definition of a BCI as “a communication system in which messages or commands that an individual sends to the external world do not pass through the brain’s normal output pathways of peripheral nerves and muscles” (Wolpaw et al., 2002). However, practical reason does not always stay within the limits of formal definitions. The oculomotor system is closely connected to the brain’s attentional networks and is anatomically distinct from the main motor system (Parr, & Friston, 2017). In clinical conditions such as ALS, many paralyzed patients can control their gaze sufficiently well to benefit from gaze-based enhancement of BCIs. Moreover, gaze itself can be used, overtly and directly, to control computers, robots, and other machines, without any external stimulation and typically faster than with a BCI.

Gaze-based interaction

The main component of gaze-based interaction with technical systems and communication with other people is video-oculography, that is, eye tracking using a video camera that makes it possible to trace the position of the pupil and, on that basis, to estimate the gaze coordinates. This technology is noninvasive and does not require attaching any sensors to the user’s skin. Head movement restriction, once crucially important for obtaining high-quality data, is becoming less strict with the progress of technology, so a completely remote and non-constraining registration of eye movements is no longer unusual. Although under development for decades, in parallel to BCIs, eye-tracking technology was too expensive for use in consumer products, with the rare exceptions of communication systems for paralyzed persons (Pannasch, Helmert, Malischke, Storch, & Velichkovsky, 2008). Affordable eye trackers with sufficient capacities have recently appeared on the market, and applications of eye tracking are being developed for virtual and augmented reality helmets and even for smartphones, with the control and/or communication function considered as the most important. Jacob and Karn noted (2003, p. 589), “Before the user operates any mechanical pointing device, … the eye movement is available as an indication of the user’s goal”. This was demonstrated quantitatively in a number of studies; for example, it was shown that users tend to fixate on a display button or a link prior to approaching them manually or with the mouse cursor (Huang, White, & Buscher, 2012). It is not uncommon in interaction with computers that the mouse leads the gaze, but this is observed mainly for well-known locations (Liebling, & Dumais, 2014). Generally, visual fixations at an action location prior to the action are observed when objects in the physical world are being explored and manipulated (Johansson, Westling, Bäckström, & Flanagan, 2001; Land, Mennie, & Rusted, 1999; Neggers, & Bekkering, 2000; Velichkovsky, Pomplun, & Rieser, 1996).

In some practical applications, such as typing, gaze-based approaches became effective years ago (Bolt, 1982; Jacob, 1991; Velichkovsky, Sprenger, & Unema, 1997). Noninvasive BCI systems still cannot offer a level of speed, accuracy, and convenience similar to those of gaze typing systems. When targets are not too small, they can be selected using an eye tracker even faster than with a computer mouse (Ware, & Mikaelian, 1987; Sibert, & Jacob, 2000). A system can be tuned to respond to very short gaze dwells, e.g., 150–250 ms, so that the user gets a feeling of “a highly responsive system, almost as though the system is executing the user’s intentions before he expresses them” (Jacob, 1991, p. 164). There is, however, a danger in such an extreme tuning, as it can lead to a vanishing sense of agency and abrupt deterioration of performance (Velichkovsky, 1995). Obviously an optimal threshold value has to be found in every particular case (Helmert, Pannasch, & Velichkovsky, 2008).
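Dwell-based selection with a tunable threshold can be sketched as follows. The data format (timestamped gaze samples and rectangular responsive regions) and the function name are assumptions made for this illustration; they do not describe any specific eye-tracking system.

```python
def detect_dwells(samples, regions, dwell_ms):
    """Report one selection each time gaze stays in a region for dwell_ms.

    samples: list of (t_ms, x, y) gaze samples in temporal order.
    regions: dict mapping a region name to (x0, y0, x1, y1).
    Returns a list of (t_ms, region_name) selections.
    """
    selections = []
    current, start_t, fired = None, None, False
    for t, x, y in samples:
        # Find the responsive region (if any) that contains this sample.
        hit = next(
            (
                name
                for name, (x0, y0, x1, y1) in regions.items()
                if x0 <= x <= x1 and y0 <= y <= y1
            ),
            None,
        )
        if hit != current:
            # Gaze entered a new region or left all regions: restart timing.
            current, start_t, fired = hit, t, False
        if current is not None and not fired and t - start_t >= dwell_ms:
            selections.append((t, current))
            fired = True  # one selection per uninterrupted dwell
    return selections
```

With `dwell_ms` set to 150–250, a detector of this kind reacts to almost any fixation inside a responsive region, while larger values trade responsiveness for fewer unintended selections.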

The Midas touch problem and natural gaze interaction

The most fundamental problem associated with eye-tracker-based interaction is known as the Midas touch problem: if an interface interprets visual fixation as a command, “you cannot look anywhere without issuing a command” (Jacob, 1991, p. 156). In the case of typing, areas outside a virtual keyboard can be made nonresponsive to gaze, so a user can simply avoid looking at the keyboard when he or she is not going to type; but it is difficult to avoid looking at the responsive area all the time. In a dynamic environment, e.g., in the case of robot control, or when the locations of response keys and areas for presenting visual information are close to each other, the Midas touch problem may make the interface annoying and inefficient. This is because the main function of gaze is to explore the visible environment, and this function normally is not under conscious control (Findlay, & Gilchrist, 2003). The eyes also move in an uncontrolled manner when we are thinking (Ehrlichman, & Micic, 2012; Walcher, Körner, & Benedek, 2017).

If an interface is too responsive, its behavior becomes highly unnatural, because people “expect to be able to look at an item without having the look ‘mean’ something” (Jacob, 1991, p. 156). Almost all known means of avoiding the Midas touch problem require that the user move his or her eyes not naturally, but according to learned patterns (e.g., learned sequences of saccade directions or long dwells of 500 ms or more), so the use of the interface often becomes tiresome and/or relatively slow (Majaranta, & Bulling, 2014). Jacob searched for patterns in non-instructed gaze behavior that can be used by the system to infer the user’s goals. This led him to find that object selection using gaze dwell times as short as 150–250 ms works well when selection can be easily undone (Jacob, 1991). Given that the eyes automatically fixate for such short times very frequently, without any intention to select anything, it is not surprising that it was he who coined the term “the Midas touch problem”.

Is it possible to make both the eye movement input to the interface and the interface’s response natural? Jacob (1993) noted that this is the case when interaction is organized similarly to how people respond to another person’s gaze, although this approach is usually difficult to implement; for example, in one study, interaction was constructed by analogy with a tour guide who estimates the visitor’s interests by his or her gazes (Starker, & Bolt, 1990). It is likely that the basic function of gaze control which makes possible the use of gaze for interaction with machines is related not to vision, but to communication (Velichkovsky, Pomplun, & Rieser, 1996; Zhu, Gedeon, & Taylor, 2010), so it might be useful to learn more from communicative gaze behavior. This approach has attracted little attention over the decades of gaze interaction technology development, although it could lead to radical solutions of the Midas touch problem.

New developments at the Kurchatov Institute

Communicative gaze control of robots

Gaze alone – without speech, hand gestures, and (rarely, under natural conditions) without head movements – is used by humans to convey to other humans certain types of deictic information, mainly about spatial locations of interest. In everyday life, the role of this ability is, of course, far less prominent than the role of speech, but it can be comparable or more efficient for spatial information (Velichkovsky, 1995). The face and, especially, the eyes are powerful attractors of attention, stronger than the physical contrasts and semantic relations between the perceived objects (Velichkovsky et al., 2012). In particular, eye-to-eye contact is known to mobilize evolutionarily newer brain structures, an effect that can be observed even in humans who are facing an agent that is clearly virtual (Schrammel, Graupner, Mojzisch, & Velichkovsky, 2009). It was demonstrated that humans are very sensitive to a robot’s gaze behavior, while they perfectly well realize that a robot is merely a machine: participants who were asked to judge a rescue robot’s behavior felt more support from it when it “looked” at them (Dole et al., 2013) and emotional expression transfer from android avatars to human subjects was observed only in the case of simulated eye-to-eye contacts (Mojzisch, Schilbach, Helmert, Velichkovsky, & Vogeley, 2007).

An approach proposed for robot control by our group (Fedorova, Shishkin, Nuzhdin, & Velichkovsky, 2015; Shishkin, Fedorova, Nuzhdin, & Velichkovsky, 2014) was based on the developmental studies of joint attention, i.e., “simultaneous engagement of two or more individuals in mental focus on one and the same external thing” (Baldwin, 1995). The importance of joint attention was first noted by L.S. Vygotsky, and it remains an important focus of modern research (Carpenter, & Liebal, 2011; Tomasello, 1999). Importantly, joint attention gaze patterns are fast, and can function effectively even under high cognitive load (Xu, Zhang, & Geng, 2011). Last but not least, the Midas touch problem has not been observed with gaze communication in the joint attention mode, i.e., unintended eye movements caused by distractors or by lapses of attention do not normally lead to misinterpretation of information conveyed through gaze in this mode (Velichkovsky, Pomplun, & Rieser, 1996).

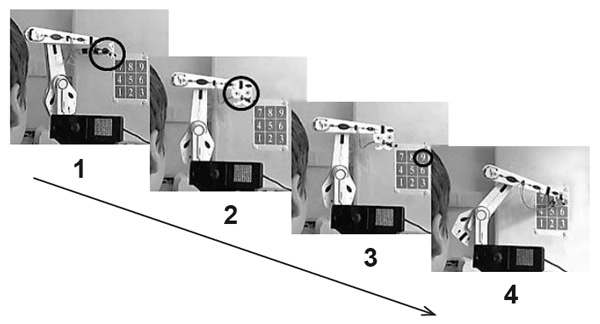

Although the human’s ability to use eye movements for communication and control through eye-tracking technology has been addressed in the literature (Velichkovsky, 1995; Zhu, Gedeon, & Taylor, 2010), this understanding did not lead to making use of communicative gaze patterns in such a way that a machine is considered as a partner rather than a tool. We implemented joint attention patterns for control of a simple robot arm (R12-six, ST Robotics, UK). Because in two-way gaze communication a partner should have something that is perceived as eyes, with relevant “gaze behavior”, a plain paper mask with “eyes” was attached to the robot’s hand to provide it with certain anthropomorphic features (Fig. 1). The participant’s eyes were tracked with a desktop eye tracker (Eyelink 1000 Plus, SR Research, Canada). The robot arm could be controlled by gaze patterns (here, predefined sequences of gaze fixations).

Figure 1. Gaze-based “communication” with a robot arm: a series of views from behind a participant (after Fedorova et al., 2015)

In the study, “communicative” patterns were compared with “instrumental” patterns. Specifically, “communicative” patterns were based on joint attention gaze patterns and included looking at the robot’s “eyes” (see Figure 1). The pattern started from an “activating” gaze dwell of 500 ms or longer at the robot’s “head” (1), and continued with the robot’s turn toward the participant with resulting “eye-to-eye” contact (2). The immediately following human visual fixation location in the working field (3) was registered by the robot as the goal of the action required, so the robot pointed at the target with its “nose” as an emulated work instrument (4). In the “instrumental” pattern compared with this “communicative” pattern, a dwell on the “button” led to its lighting up and the robot’s turning to a preparatory position, but without “looking” at the participant.
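The four steps of the “communicative” pattern can be summarized as a small state machine. The sketch below is only an illustration of the sequence described above, not the software used in the study: the area labels, the action names, and the decision to ignore fixations outside the working field while the robot “looks” at the participant are assumptions.

```python
def communicative_control(fixations, activation_dwell_ms=500):
    """Translate a sequence of fixations into robot actions.

    fixations: iterable of (area, duration_ms, location), where area is
    "robot_head", "working_field", or any other label (all hypothetical).
    Returns a list of (action, argument) tuples.
    """
    actions = []
    state = "idle"
    for area, duration_ms, location in fixations:
        if state == "idle":
            # Step 1: an activating dwell on the robot's "head".
            if area == "robot_head" and duration_ms >= activation_dwell_ms:
                # Step 2: the robot turns, producing "eye-to-eye" contact.
                actions.append(("turn_to_participant", None))
                state = "eye_contact"
        elif state == "eye_contact":
            # Step 3: the next fixation in the working field is the goal.
            if area == "working_field":
                # Step 4: the robot points at the fixated location.
                actions.append(("point_at", location))
                state = "idle"
    return actions
```

Note that fixations in the working field have no effect unless they follow the activation step, which is the property that could, in principle, protect such a pattern from unintended commands.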

Participants were only told that the robot can be controlled using eye gaze and that they had to find a way, using their gaze only, to make the robot point at the target. Although not aware of what specifically they had to do with their eye movements, participants easily found both “communicative” and “instrumental” patterns, but showed no preference for either of them. However, it appeared from their reports that the robot’s response to the “communicative” pattern was surprising and evoked the vivid impression that the robot shared their intention. This is distinct from what can be expected from a mechanical device; therefore, it may take time to get used to it. The study protocol included no practice and no testing of the hypothesis that “communicative” control can suppress gaze control’s vulnerability to distractors and help to avoid the Midas touch problem. To decide whether it really offers significant benefits over the known strategies, further experiments are needed. Nevertheless, the present study confirmed the feasibility of “communicative” gaze control of robot behavior.

Selection of a moving target from a swarm

Interaction between a human operator and robot swarms has become an important area of human-machine interaction studies (Kolling, Nunnally, & Lewis, 2012). When an operator interacts with a large group of moving objects (in our case, the robots shown on a screen), selection of one of them with a mechanical pointer to receive detailed information from it or to send it a distinct command might not be an easy task, especially if they move in different directions, on different trajectories, and with varying speed. Fortunately, this situation is a special case of multiple object tracking (MOT), fairly well investigated in cognitive science by Zenon Pylyshyn and his colleagues (e.g., Keane & Pylyshyn, 2006), who demonstrated that this task can be solved very quickly and in preattentive mode.

Following a moving object of interest may constitute a special case where interest in an object may be sufficient to select it by gaze in a natural way, without making artificially long static visual fixations. In this case, the gaze especially easily orients toward a moving object of interest and follows it continuously with high precision and without apparent effort, by using a distinct category of eye movements, the so-called dynamic visual fixations, or smooth pursuit (Brielmann, & Spering, 2015; Yarbus, 1967).

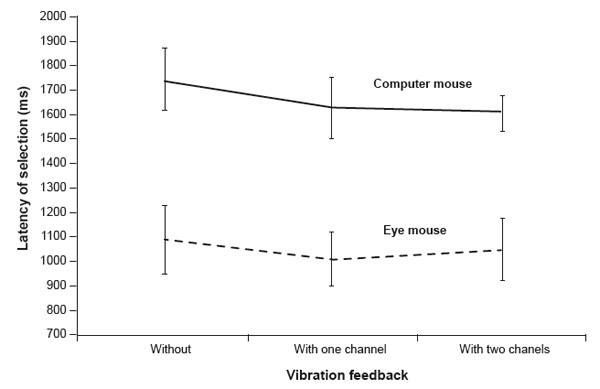

The use of smooth pursuit was recently considered to be of importance for interaction with technical devices (Esteves, Velloso, Bulling, & Gellersen, 2015). Its application to the robot selection problem was elaborated by our group (Zhao, Melnichuk, Isachenko, & Shishkin, 2017). In a preliminary study, robots were simulated on a computer screen by 20 balls; each of them was 2.5 deg in diameter and moving at a speed of 9 deg/s. Each ball had its own trajectory, changing direction each time it collided with other balls or with the screen’s edges. By default, balls were gray, a target ball was indicated by red color, and selection changed the color to green. Selection was made with a cursor that was controlled, in different experimental conditions, either by a computer mouse or by gaze (an eye mouse). To select a ball, the cursor had to stay within a threshold distance of it for 500 ms. Three different conditions of vibration-based feedback for a successful selection were used: no vibration, vibration in one channel, and vibration in two channels. With the computer mouse, the task required an average of about 1.7 s, while gaze-based selection was significantly faster, 1.1 s (Figure 2). Vibration feedback seemed to play no role in the selection efficiency.

Figure 2. Time to selection of one of a number of moving targets as a function of the output device and the vibration feedback
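The selection criterion used in this task, a cursor that stays close to a moving ball for 500 ms, can be sketched as follows. The frame format, the function name, and the particular threshold value are assumptions for illustration; the threshold actually used in the study is not specified here.

```python
import math


def select_moving_target(frames, radius, hold_ms=500):
    """Return (t_ms, ball_id) for the first ball the cursor follows long enough.

    frames: list of (t_ms, cursor_xy, balls) in temporal order, where
    balls maps a ball id to its (x, y) position in that frame.
    radius: assumed threshold distance, in the same units as positions.
    """
    near_since = {}  # ball id -> time when the cursor came within radius
    for t, cursor, balls in frames:
        for ball_id, position in balls.items():
            if math.dist(cursor, position) <= radius:
                near_since.setdefault(ball_id, t)
                if t - near_since[ball_id] >= hold_ms:
                    return t, ball_id
            else:
                # The cursor left the ball: the hold interval starts over.
                near_since.pop(ball_id, None)
    return None
```

The same function applies whether the cursor is driven by a computer mouse or by gaze; only the source of `cursor_xy` differs between the two conditions.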

Perhaps perception of robots as animated autonomous agents is not necessary to enable interaction, as interest in the object and its movements may be sufficient to initiate and maintain smooth pursuit. In further studies, we will try to enhance moving robot selection using the EEG marker of intention, which is described in the next section. Another technical issue is that the selection of real objects in space needs a version of 3D binocular eye tracking, so algorithms for solving this classic measurement task have to be adopted (Wang, Pelfrey, Duchowski, & House, 2014; Weber, Schubert, Vogt, Velichkovsky, & Pannasch, 2017). In our current studies, we also aim at obtaining a shorter selection time by using advanced selection algorithms that were proposed for smooth-pursuit-based gaze interaction (Esteves et al., 2015).

Hunting for intention in the human brain

Velichkovsky and Hansen were the first to propose solving the Midas touch problem by combining gaze-based control with a BCI: “point with your eye and click with your mind!” (Velichkovsky, & Hansen, 1996, p. 498). A number of research groups then tried to implement this idea by combining eye-tracker-based input with one of the existing BCIs, but the BCI component always added to the resulting hybrid system the worst features of non-invasive BCIs, namely low speed and accuracy, and the combination was not successful. A game-changing approach was proposed by Zander, based on his idea of “passive” BCIs that do not require the user’s attention. Passive BCIs (Zander, & Kothe, 2011) monitor the user’s brain state and react to it rather than to the user’s explicit commands. In this approach, the BCI classified gaze fixations as spontaneous or intentional (Ihme, & Zander, 2011; Protzak, Ihme, & Zander, 2013), presumably by using an EEG response related to expectation of the gaze-controlled interface feedback, so that, paradoxically, expectation is used to trigger the action that is expected.

The most relevant EEG phenomenon was discovered about 50 years ago by Grey Walter. He used an experimental paradigm in which a warning stimulus preceded an imperative stimulus (one requiring a response) with a fixed time interval between them. The slow negative wave that develops in the interval between the two stimuli was called the Contingent Negative Variation (CNV). As early as 1966, Walter proposed, in an abstract for the EEG Society meeting, that the expectancy wave (E-wave), the non-motor part of CNV, “can be made to initiate or arrest an imperative stimulus directly, thus by-passing the operant effector system” (Walter, 1966, p. 616). The E-wave, or the Stimulus-Preceding Negativity (SPN), a name introduced for a slightly different experimental paradigm (Brunia, & Van Boxtel, 2001), is what can be expected to appear in the gaze fixations intentionally used for interaction.

Zander’s group did not study this EEG marker in detail, and their experimental design included only visual search. In this condition, the P300 wave appears in fixations on targets, allowing the researcher to differentiate target and non-target fixations by this very different EEG component (Kamienkowski, Ison, Quiroga, & Sigman, 2012; Brouwer, Reuderink, Vincent, van Gerven, & van Erp, 2013; Ušćumlić, & Blankertz, 2016). While the combination of the P300 and gaze fixation also may be a promising tool for human-machine interaction, it cannot be used for sending commands to machines deliberately. In addition, the dwell time used in these studies was too long (1 s, in Protzak, Ihme, & Zander, 2013). Therefore, we designed a study where gaze dwells with a shorter threshold (500 ms) were used to trigger freely chosen actions.

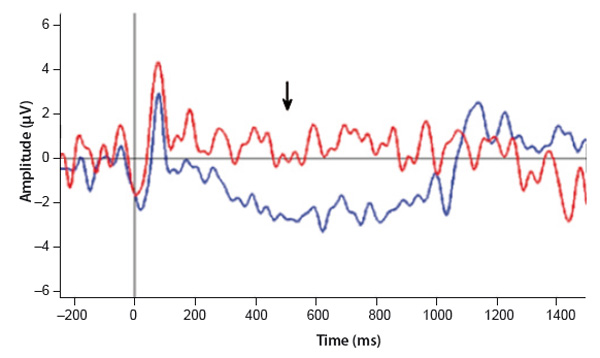

In our research (Shishkin et al., 2016; Velichkovsky et al., 2016), spontaneous and intention-related gaze dwells were collected when the participants played EyeLines, a gaze-controlled version of the computer game Lines. In EyeLines, each move consists of three gaze dwells: (1) switching the control on by means of a gaze dwell at a remote “switch-on” location, (2) selection of one of the balls presented in the game field, (3) dwell on a free cell to which the ball had to be moved. After the ball was moved and before the next fixation on the “switch-on” location, no fixation had any effect, so that spontaneous fixations could be collected. Special efforts were made to ensure that eye-movement-related electrophysiological artifacts did not affect the analyzed EEG intervals. In all participants, a negative wave was indeed discovered in the gaze dwells used for control and it was absent or had lower amplitude in the spontaneous fixations. The results are shown in Figure 3. Note that the waveforms reveal no signs of P300. Based on statistical features extracted from 300 ms EEG intervals (200-500 ms relative to dwell start), intentional and spontaneous dwells could be classified on a single-trial basis with accuracy much greater than the random level (Shishkin et al., 2016).

Figure 3. Fixation-related brain potentials (POz, grand average, n = 8) for gaze dwells intentionally used to trigger actions are shown by the blue line and for spontaneous fixations by the red line. “0” corresponds to dwell start; visual feedback was presented at 500 ms, corresponding to the arrow position (after Shishkin et al., 2016; Velichkovsky et al., 2016).
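The general idea of single-trial classification from the 200–500 ms interval can be illustrated with a deliberately simple sketch. It does not reproduce the feature set or the classifier of the cited studies: the windowed mean amplitudes, the midpoint threshold, and all function names are assumptions, chosen only to show how a more negative potential in this interval could mark a dwell as intentional.

```python
from statistics import mean


def window_means(epoch, sampling_rate_hz, start_ms=200, stop_ms=500, n_bins=3):
    """Mean amplitude in consecutive bins of the 200-500 ms interval.

    epoch: EEG samples from one channel, with index 0 at dwell start.
    """
    first = int(start_ms * sampling_rate_hz / 1000)
    last = int(stop_ms * sampling_rate_hz / 1000)
    segment = epoch[first:last]
    size = len(segment) // n_bins
    return [mean(segment[i * size:(i + 1) * size]) for i in range(n_bins)]


def dwell_score(epoch, sampling_rate_hz):
    """Single number per dwell: the average of the windowed means."""
    return mean(window_means(epoch, sampling_rate_hz))


def fit_threshold(intentional_epochs, spontaneous_epochs, sampling_rate_hz):
    """Place the threshold midway between the two class means (assumed rule)."""
    intentional = mean(dwell_score(e, sampling_rate_hz) for e in intentional_epochs)
    spontaneous = mean(dwell_score(e, sampling_rate_hz) for e in spontaneous_epochs)
    return (intentional + spontaneous) / 2


def is_intentional(epoch, threshold, sampling_rate_hz):
    """Classify a dwell as intentional if its potential is more negative."""
    return dwell_score(epoch, sampling_rate_hz) < threshold
```

Because the interval ends at 500 ms, such a decision is available at the moment the dwell threshold is reached, so the classifier can act as a filter on dwell-based selection without adding delay.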

In subsequent studies, we found that the EEG marker for the gaze dwells intentionally used for control does not depend on gaze direction (Korsun et al., 2017) and demonstrated that initially chosen approaches to construct feature sets and the classifier can be further improved (Shishkin et al., 2016a). Preliminary attempts to classify the 500 ms gaze dwells online using the passive expectation-based BCI (Nuzhdin et al., 2017a; Nuzhdin et al., 2017 in press) so far have not shown a significant improvement compared to gaze alone. This could be related to a suboptimal classifier and/or inadequate choice of tests, because in the game we used, actions were quickly automated at the beginning of our research, while SPN amplitude is likely to decrease precisely under such conditions. We are now improving the classifier and preparing better tests to investigate in detail the capacity and limitations of the eye-brain-computer interface (EBCI), as we call this new hybrid system. If this line of applied cognitive research is successful, it could result in interfaces responding to the user’s intentions more easily and without annoying, unintended activations.

Conclusion

We discussed here ways to bypass the common limits of the brain motor system in human-machine interaction. Invasive BCIs can be surprisingly efficient, but their use may remain too risky for decades to come. Noninvasive BCIs are also not well suited for this purpose, and their progress in performance by healthy users is critically dependent on gaze’s impact upon the selection of spatial locations. In our overview of the new developments at the Kurchatov Institute, we discussed the communicative nature of human-robot interaction and approaches to building a more efficient technology on this basis. Specifically, “communicative” patterns of interaction can be based on joint attention paradigms from developmental psychology, including a mutual exchange of “eye-to-eye” looks between human and robot. Further, we provided an example of eye mouse superiority over the computer mouse, here in emulating the task of selecting a moving robot from a swarm. Finally, a passive noninvasive BCI that uses EEG correlates of intention was demonstrated. This may become an important filter to separate intentional gaze dwells from non-intentional ones. These new approaches show a high potential for creating alternative output pathways for the human brain. When support from passive BCIs matures, the hybrid EBCI technology will have a chance to enable natural, fluent, and effortless interaction with machines in various fields of application.

According to Douglas Engelbart (1962), intellectual progress often depends on reducing the effort needed for interaction with artificial systems. Centered on this idea, he developed basic elements of the modern human-computer interface, such as the mouse, hypertext, and elements of the graphical user interface. These tools have radically improved humans’ interaction with computers and, in part, with robots, but they have not completely removed physical effort from the interaction process. Could we become even more effective in solving intellectual tasks, at least when mental concentration is crucial, if our collaboration with technical devices were totally free of physical activity? Further studies are needed to answer this question, but cognitive interaction technologies seem to be becoming advanced enough for such experiments to be conducted in the near future.

Acknowledgments

This study was supported in part by the Russian Foundation for Basic Research (RFBR ofi-m grant 15-29-01344). We wish to thank E. V. Melnichuk for his participation in the experiments.

References

Allison, B., Luth, T., Valbuena, D., Teymourian, A., Volosyak, I., & Graser, A. (2010). BCI demographics: How many (and what kinds of) people can use an SSVEP BCI? IEEE Transactions on Neural Systems and Rehabilitation Engineering, 18(2), 107–116. doi: 10.1109/TNSRE.2009.2039495

Aminaka, D., Makino, S., & Rutkowski, T. M. (2015). Chromatic and high-frequency cVEP-based BCI paradigm. Engineering in Medicine and Biology Society (EMBC), 2015 37th Annual International Conference of the IEEE (pp. 1906–1909). IEEE Press. doi: 10.1109/EMBC.2015.7318755

Baldwin, D. A. (1995). Understanding the link between joint attention and language. In C. Moore & P. J. Dunham (Eds.), Joint attention: Its origins and role in development (pp. 131–158). Hillsdale, NJ: Lawrence Erlbaum.

Bergson, H. (2006). L'Évolution créatrice. Paris: PUF. (Original work published 1907)

Bin, G., Gao, X., Wang, Y., Li, Y., Hong, B., & Gao, S. (2011). A high-speed BCI based on code modulation VEP. Journal of Neural Engineering, 8(2), 025015. doi: 10.1088/1741-2560/8/2/025015

Bolt, R. A. (1982). Eyes at the interface. Proceedings of 1982 Conference on Human Factors in Computing Systems (pp. 360–362). New York: ACM Press. doi: 10.1145/800049.801811

Bolt, R. A. (1990). A gaze-responsive self-disclosing display. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 3–10). New York: ACM Press.

Bowsher, K., Civillico, E. F., Coburn, J., Collinger, J., Contreras-Vidal, J. L., Denison, T., . . . & Hoffmann, M. (2016). Brain–computer interface devices for patients with paralysis and amputation: A meeting report. Journal of Neural Engineering, 13(2), 023001. doi: 10.1088/1741-2560/13/2/023001

Brielmann, A. A., & Spering, M. (2015). Effects of reward on the accuracy and dynamics of smooth pursuit eye movements. Journal of Experimental Psychology: Human Perception and Performance, 41(4), 917–928. doi: 10.1037/a0039205

Brouwer, A. M., Reuderink, B., Vincent, J., van Gerven, M. A., & van Erp, J. B. (2013). Distinguishing between target and nontarget fixations in a visual search task using fixation-related potentials. Journal of Vision, 13(3), 17. doi: 10.1167/13.3.17

Brunia, C. H. M., & Van Boxtel, G. J. M. (2001). Wait and see. International Journal of Psychophysiology, 43, 59–75. doi: 10.1016/S0167-8760(01)00179-9

Brunner, C., Birbaumer, N., Blankertz, B., Guger, C., Kübler, A., Mattia, D., . . . & Ramsey, N. (2015). BNCI Horizon 2020: Towards a roadmap for the BCI community. Brain-Computer Interfaces, 2(1), 1–10.

Carpenter, M., & Liebal, K. (2011). Joint attention, communication, and knowing together in infancy. In A. Seemann (Ed.), Joint attention: New developments in psychology, philosophy of mind, and social neuroscience (pp. 159–181). Cambridge, MA: MIT Press.

Chen, X., Wang, Y., Nakanishi, M., Gao, X., Jung, T. P., & Gao, S. (2015). High-speed spelling with a noninvasive brain–computer interface. Proceedings of the National Academy of Sciences, 112(44), E6058–E6067. doi: 10.1073/pnas.1508080112

Collinger, J. L., Wodlinger, B., Downey, J. E., Wang, W., Tyler-Kabara, E. C., Weber, D. J., . . . & Schwartz, A. B. (2013). High-performance neuroprosthetic control by an individual with tetraplegia. The Lancet, 381(9866), 557–564. doi: 10.1016/S0140-6736(12)61816-9

DiDomenico, A., & Nussbaum, M. A. (2011). Effects of different physical workload parameters on mental workload and performance. International Journal of Industrial Ergonomics, 41(3), 255–260. doi: 10.1016/j.ergon.2011.01.008

Dole, L. D., Sirkin, D. M., Currano, R. M., Murphy, R. R., & Nass, C. I. (2013). Where to look and who to be: Designing attention and identity for search-and-rescue robots. Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction (pp. 119–120). IEEE Press. doi: 10.1109/HRI.2013.6483530

Ehrlichman, H., & Micic, D. (2012). Why do people move their eyes when they think? Current Directions in Psychological Science, 21(2), 96–100. doi: 10.1177/0963721412436810

Engelbart, D. C. (1962). Augmenting human intellect: A conceptual framework. SRI Summary Report AFOSR-322.

Esteves, A., Velloso, E., Bulling, A., & Gellersen, H. (2015). Orbits: Gaze interaction for smart watches using smooth pursuit eye movements. Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology (pp. 457–466). New York: ACM Press. doi: 10.1145/2807442.2807499

Fedorova, A. A., Shishkin, S. L., Nuzhdin, Y. O., Faskhiev, M. N., Vasilyevskaya, A. M., Ossadtchi, A. E., . . . Velichkovsky, B. M. (2014). A fast “single-stimulus” brain switch. In G. Muller-Putz et al. (Eds.), Proceedings of 6th International Brain-Computer Interface Conference (Article 052). Graz: Verlag der Technischen Universität Graz. doi: 10.3217/978-3-85125-378-8-52

Fedorova, A. A., Shishkin, S. L., Nuzhdin, Y. O., & Velichkovsky, B. M. (2015). Gaze based robot control: The communicative approach. In 7th International IEEE/EMBS Conference on Neural Engineering (NER) (pp. 751–754). IEEE Press.

Findlay, J. M., & Gilchrist, I. D. (2003). Active vision: The psychology of looking and seeing. Oxford: Oxford University Press. doi: 10.1093/acprof:oso/9780198524793.001.0001

Gao, S., Wang, Y., Gao, X., & Hong, B. (2014). Visual and auditory brain–computer interfaces. IEEE Transactions on Biomedical Engineering, 61(5), 1436–1447. doi: 10.1109/TBME.2014.2300164

Guger, C., Allison, B. Z., Großwindhager, B., Prückl, R., Hintermüller, C., Kapeller, C., . . . & Edlinger, G. (2012). How many people could use an SSVEP BCI? Frontiers in Neuroscience, 6, 169. doi: 10.3389/fnins.2012.00169

Heider, F., & Simmel, M. (1944). An experimental study of apparent behavior. The American Journal of Psychology, 57(2), 243–259. doi: 10.2307/1416950

Helmert, J. R., Pannasch, S., & Velichkovsky, B. M. (2008). Influences of dwell time and cursor control on the performance in gaze driven typing. Journal of Eye Movement Research, 2(4), 3, 1–8.

Huang, J., White, R., & Buscher, G. (2012). User see, user point: Gaze and cursor alignment in web search. Proceedings of SIGCHI Conference on Human Factors in Computing Systems (pp. 1341–1350). New York: ACM Press. doi: 10.1145/2207676.2208591

Ihme, K., & Zander, T. O. (2011). What you expect is what you get? Potential use of contingent negative variation for passive BCI systems in gaze-based HCI. Affective computing and intelligent interaction (ACII 2011), 447–456. doi: 10.1007/978-3-642-24571-8_57

Jacob, R. J. (1991). The use of eye movements in human-computer interaction techniques: What you look at is what you get. ACM Transactions on Information Systems (TOIS), 9(2), 152–169. doi: 10.1145/123078.128728

Jacob, R. J. (1993). Eye movement-based human-computer interaction techniques: Toward non-command interfaces. Advances in Human-Computer Interaction, 4, 151–190.

Jacob, R. J., & Karn, K. S. (2003). Eye tracking in human-computer interaction and usability research: Ready to deliver the promises. In R. Radach, J. Hyona, & H. Deubel (Eds.), The mind’s eye: Cognitive and applied aspects of eye movement research (pp. 573–605). Oxford, UK: Elsevier. doi: 10.1016/B978-044451020-4/50031-1

Johansson, R. S., Westling, G., Bäckström, A., & Flanagan, J. R. (2001). Eye–hand coordination in object manipulation. Journal of Neuroscience, 21(17), 6917–6932.

Kamienkowski, J. E., Ison, M. J., Quiroga, R. Q., & Sigman, M. (2012). Fixation-related potentials in visual search: A combined EEG and eye tracking study. Journal of Vision, 12(7), 4. doi: 10.1167/12.7.4

Kaplan, A. Y., Shishkin, S. L., Ganin, I. P., Basyul, I. A., & Zhigalov, A. Y. (2013). Adapting the P300-based brain–computer interface for gaming: A review. IEEE Transactions on Computational Intelligence and AI in Games, 5(2), 141–149. doi: 10.1109/TCIAIG.2012.2237517

Keane, B., & Pylyshyn, Z. W. (2006). Is motion extrapolation employed in multiple object tracking? Tracking as a low-level, non-predictive function. Cognitive Psychology, 52(4), 346–368. doi: 10.1016/j.cogpsych.2005.12.001

Kolling, A., Nunnally, S., & Lewis, M. (2012). Towards human control of robot swarms. In Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction (pp. 9–96). New York: ACM Press. doi: 10.1145/2157689.2157704

Korsun, O. V., Shishkin, S. L., Medyntsev, A. A., Fedorova, A. A., Nuzhdin, Y. O., Dubynin, I. A., & Velichkovsky, B. M. (2017). The EEG marker for the intentional gaze dwells used to interact with a computer is independent of horizontal gaze direction. Proceedings of the 4th Conference “Cognitive Science in Moscow: New Studies”, 428–433.

Lahr, J., Schwartz, C., Heimbach, B., Aertsen, A., Rickert, J., & Ball, T. (2015). Invasive brain–machine interfaces: A survey of paralyzed patients’ attitudes, knowledge and methods of information retrieval. Journal of Neural Engineering, 12(4), 043001. doi: 10.1088/1741-2560/12/4/043001

Land, M., Mennie, N., & Rusted, J. (1999). The roles of vision and eye movements in the control of activities of daily living. Perception, 28(11), 1311–1328. doi: 10.1068/p2935

Lebedev, M. A., & Nicolelis, M. A. (2017). Brain-Machine Interfaces: From basic science to neuroprostheses and neurorehabilitation. Physiological Reviews, 97(2), 767–837. doi: 10.1152/physrev.00027.2016

Lesenfants, D., Habbal, D., Lugo, Z., Lebeau, M., Horki, P., Amico, E., . . . & Laureys, S. (2014). An independent SSVEP-based brain–computer interface in locked-in syndrome. Journal of Neural Engineering, 11(3), 035002. doi: 10.1088/1741-2560/11/3/035002

Liebling, D. J., & Dumais, S. T. (2014). Gaze and mouse coordination in everyday work. Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication (pp. 1141–1150). New York: ACM Press. doi: 10.1145/2638728.2641692

Majaranta, P., & Bulling, A. (2014). Eye tracking and eye-based human–computer interaction. In S. H. Fairclough & K. Gilleade (Eds.), Advances in physiological computing: Human–computer interaction series (pp. 39–65). London: Springer-Verlag. doi: 10.1007/978-1-4471-6392-3_3

Manyakov, N. V., Chumerin, N., & Van Hulle, M. M. (2012). Multichannel decoding for phase-coded SSVEP brain–computer interface. International Journal of Neural Systems, 22(05), 1250022. doi: 10.1142/S0129065712500220

Mojzisch, A., Schilbach, L., Helmert, J. R., Velichkovsky, B. M., & Vogeley, K. (2007). The effects of self-involvement on attention, arousal, and facial expression during social interaction with virtual others. Social Neuroscience, 1(1), 184–195.

Neggers, S. F., & Bekkering, H. (2000). Ocular gaze is anchored to the target of an ongoing pointing movement. Journal of Neurophysiology, 83(2), 639–651.

Nuzhdin, Y. O., Shishkin, S. L., Fedorova, A. A., Trofimov, A. G., Svirin, E. P., Kozyrskiy, B. L., . . . Velichkovsky, B. M. (2017a). The expectation-based Eye-Brain-Computer Interface: An attempt of online test. Proceedings of the 2017 ACM Workshop on An Application-Oriented Approach to BCI Out of the Laboratory (pp. 39–42). New York: ACM Press. doi: 10.1145/3038439.3038446

Nuzhdin, Y. O., Shishkin, S. L., Fedorova, A. A., Kozyrskiy, B. L., Medyntsev, A. A., Svirin, E. P., . . . Velichkovsky, B. M. (2017 in press). Passive detection of feedback expectation: Towards fluent hybrid eye-brain-computer interfaces. Proceedings of the 7th International Brain-Computer Interface Conference. Graz: Verlag der Technischen Universität Graz.

Pannasch, S., Helmert, J. R., Malischke, S., Storch, A., & Velichkovsky, B. M. (2008). Eye typing in application: A comparison of two systems with ALS patients. Journal of Eye Movement Research, 2(4), 6, 1–8.

Parr, T., & Friston, K. J. (2017). The active construction of the visual world. Neuropsychologia, 104, 92–101. doi: 10.1016/j.neuropsychologia.2017.08.003

Protzak, J., Ihme, K., & Zander, T. O. (2013). A passive brain-computer interface for supporting gaze-based human-machine interaction. International Conference on Universal Access in Human-Computer Interaction (pp. 662–671). Heidelberg: Springer. doi: 10.1007/978-3-642-39188-0_71

Rutkowski, T. M. (2016). Robotic and virtual reality BCIs using spatial tactile and auditory oddball paradigms. Frontiers in Neurorobotics, 10, 20. doi: 10.3389/fnbot.2016.00020

Rutkowski, T. M., & Mori, H. (2015). Tactile and bone-conduction auditory brain computer interface for vision and hearing impaired users. Journal of Neuroscience Methods, 244, 45–51. doi: 10.1016/j.jneumeth.2014.04.010

Schrammel, F., Graupner, S.-T., Mojzisch, A., & Velichkovsky, B. M. (2009). Virtual friend or threat? The effects of facial expression and gaze interaction on physiological responses and emotional experience. Psychophysiology, 46(5), 922–931. doi: 10.1111/j.1469-8986.2009.00831.x

Sellers, E. W., Vaughan, T. M., & Wolpaw, J. R. (2010). A brain-computer interface for long-term independent home use. Amyotrophic Lateral Sclerosis, 11(5), 449–455. doi: 10.3109/17482961003777470

Shishkin, S. L., Fedorova, A. A., Nuzhdin, Y. O., & Velichkovsky, B. M. (2014). Upravleniye robotom s pomoschyu vzglyada: kommunikativnaya paradigma [Gaze-based control in robotics: The communicative paradigm]. In B. M. Velichkovsky, V. V. Rubtsov, & D. V. Ushakov (Eds.), Cognitive Studies, 6, 105–128.

Shishkin, S. L., Ganin, I. P., Basyul, I. A., Zhigalov, A. Y., & Kaplan, A. Y. (2009). N1 wave in the P300 BCI is not sensitive to the physical characteristics of stimuli. Journal of Integrative Neuroscience, 8(4), 471–485. doi: 10.1142/S0219635209002320

Shishkin, S. L., Fedorova, A. A., Nuzhdin, Y. O., Ganin, I. P., Ossadtchi, A. E., Velichkovsky, B. B., . . . Velichkovsky, B. M. (2013). Na puti k vysokoskorostnym interfeysam glaz-mozg-kompyuter: sochetaniye “odnostimulnoy” paradigmy i perevoda vzglyada [Toward high-speed eye-brain-computer interfaces: Combining the single-stimulus paradigm and gaze reallocation]. Vestnik Moskovskogo Universiteta. Seriya 14: Psikhologiya [Moscow University Psychology Bulletin], 4, 4–19.

Shishkin, S. L., Kozyrskiy, B. L., Trofimov, A. G., Nuzhdin, Y. O., Fedorova, A. A., Svirin, E. P., & Velichkovsky, B. M. (2016a). Improving eye-brain-computer interface performance by using electroencephalogram frequency components. RSMU Bulletin, 2, 36–41.

Shishkin, S. L., Nuzhdin, Y. O., Svirin, E. P., Trofimov, A. G., Fedorova, A. A., Kozyrskiy, B. L., & Velichkovsky, B. M. (2016b). EEG negativity in fixations used for gaze-based control: Toward converting intentions into actions with an Eye-Brain-Computer Interface. Frontiers in Neuroscience, 10, 528. doi: 10.3389/fnins.2016.00528

Sibert, L. E., & Jacob, R. J. K. (2000). Evaluation of eye gaze interaction. In Proceedings of the ACM CHI 2000 Human Factors in Computing Systems Conference, 281–288. New York: Addison-Wesley/ACM Press. doi: 10.1145/332040.332445

Tomasello, M. (1999). The cultural origins of human cognition. Cambridge, MA: Harvard University Press.

Treder, M. S., & Blankertz, B. (2010). (C)overt attention and visual speller design in an ERP-based brain-computer interface. Behavioral and Brain Functions, 6(1), 28. doi: 10.1186/1744-9081-6-28

Ušćumlić, M., & Blankertz, B. (2016). Active visual search in non-stationary scenes: Coping with temporal variability and uncertainty. Journal of Neural Engineering, 13(1), 016015. doi: 10.1088/1741-2560/13/1/016015

Velichkovsky, B. M. (1994). Towards pragmatics of human-robot interaction (Keynote address to the Congress). In J. Arnold & J. M. Prieto (Eds.), Proceedings of the 23rd International Congress of Applied Psychology, 12–15. Washington, DC: APA Press.

Velichkovsky, B. M. (1995). Communicating attention: Gaze position transfer in cooperative problem solving. Pragmatics and Cognition, 3(2), 199–224. doi: 10.1075/pc.3.2.02vel

Velichkovsky, B. M., Nuzhdin, Y. O., Svirin, E. P., Stroganova, N. A., Fedorova, A. A., & Shishkin, S. L. (2016). Upravlenie silij mysli: Na puti k novym formam vzaimodejstvija cheloveka s technicheskimi ustrojstvami [Control by “power of thought”: On the way to new forms of interaction between human and technical devices]. Voprosy Psikhologii [Issue of Psychology], 62(1), 79–88.

Velichkovsky, B. M., Cornelissen, F., Geusebroek, J.-M., Graupner, S.-Th., Hari, R., Marsman, J. B., & Shevchik, S. A. (2012). Measurement-related issues in investigation of active vision. In B. Berglund, G. B. Rossi, J. Townsend, & L. Pendrill (Eds.), Measurement with persons: Theory and methods (pp. 281–300). London/New York: Taylor and Francis.

Velichkovsky, B. M., & Hansen, J. P. (1996). New technological windows into mind: There is more in eyes and brains for human-computer interaction (Keynote address to the Conference). Proceedings of ACM CHI-96: Human factors in computing systems (pp. 496–503). New York: ACM Press.

Velichkovsky, B. M., Pomplun, M., & Rieser, H. (1996). Attention and communication: Eye-movement-based research paradigms. In W. H. Zangemeister, S. Stiel, & C. Freksa (Eds.), Visual attention and cognition, 125–254. Amsterdam/New York: Elsevier. doi: 10.1016/S0166-4115(96)80074-4

Velichkovsky, B. M., Sprenger, A., & Unema, P. (1997). Towards gaze-mediated interaction: Collecting solutions of the “Midas touch problem”. In S. Howard, J. Hammond, & G. Lindgaard (Eds.), Human-Computer Interaction INTERACT’97 (pp. 509–516). London: Chapman and Hall. doi: 10.1007/978-0-387-35175-9_77

Vidal, J. J. (1973). Toward direct brain-computer communication. Annual Review of Biophysics and Bioengineering, 2(1), 157–180. doi: 10.1146/annurev.bb.02.060173.001105

Walcher, S., Körner, C., & Benedek, M. (2017). Looking for ideas: Eye behavior during goal-directed internally focused cognition. Consciousness and Cognition, 53, 165–175. doi: 10.1016/j.concog.2017.06.009

Waldert, S. (2016). Invasive vs. non-invasive neuronal signals for brain-machine interfaces: Will one prevail? Frontiers in Neuroscience, 10, 295. doi: 10.3389/fnins.2016.00295

Walter, W. G. (1966). Expectancy waves and intention waves in the human brain and their application to the direct cerebral control of machines. Electroencephalography and Clinical Neurophysiology, 21, 616.

Wang, R. I., Pelfrey, B., Duchowski, A. T., & House, D. H. (2014). Online 3D gaze localization on stereoscopic displays. ACM Transactions on Applied Perception, 11(1), 1–21. doi: 10.1145/2593689

Ware, C., & Mikaelian, H. T. (1987). An evaluation of an eye tracker as a device for computer input. Proceedings of the ACM CHI+GI’87 Human Factors in Computing Systems Conference, 183–188. New York: ACM Press.

Weber, S., Schubert, R. S., Vogt, S., Velichkovsky, B. M., & Pannasch, S. (2017 in press). Gaze3DFix: Detecting 3D fixations with an ellipsoidal bounding volume. Behavior Research Methods.

Wodlinger, B., Downey, J. E., Tyler-Kabara, E. C., Schwartz, A. B., Boninger, M. L., & Collinger, J. L. (2014). Ten-dimensional anthropomorphic arm control in a human brain–machine interface: Difficulties, solutions, and limitations. Journal of Neural Engineering, 12(1), 016011. doi: 10.1088/1741-2560/12/1/016011

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., & Vaughan, T. M. (2002). Brain–computer interfaces for communication and control. Clinical Neurophysiology, 113(6), 767–791. doi: 10.1016/S1388-2457(02)00057-3

Xu, S., Zhang, S., & Geng, H. (2011). Gaze-induced joint attention persists under high perceptual load and does not depend on awareness. Vision Research, 51(18), 2048–2056. doi: 10.1016/j.visres.2011.07.023

Yarbus, A. L. (1967). Eye movements and vision. New York: Plenum Press. doi: 10.1007/978-1-4899-5379-7

Zander, T. O., & Kothe, C. (2011). Towards passive brain–computer interfaces: Applying brain–computer interface technology to human–machine systems in general. Journal of Neural Engineering, 8(2), 025005. doi: 10.1088/1741-2560/8/2/025005

Zhao, D. G., Melnichuk, E. V., Isachenko, A. V., & Shishkin, S. L. (2017). Vybor robota iz mobilnoy gruppirovki s pomoschyu vzglyada [Gaze-based selection of a robot from a mobile group]. Proceedings of the 4th Conference “Cognitive Science in Moscow: New Studies”, 577–581.

Zhu, D., Gedeon, T., & Taylor, K. (2010). Head or gaze? Controlling remote camera for hands-busy tasks in teleoperation: A comparison. Proceedings of the 22nd Conference Computer-Human Interaction Special Interest Group of Australia on Computer-Human Interaction, 300–303. New York: ACM Press. doi: 10.1145/1952222.1952286

To cite this article: Shishkin S. L., Zhao D. G., Isachenko A. V., Velichkovsky B. M. (2017). Gaze-and-brain-controlled interfaces for human-computer and human-robot interaction. Psychology in Russia: State of the Art, 10(3), 120–137.

The journal content is licensed with CC BY-NC “Attribution-NonCommercial” Creative Commons license.